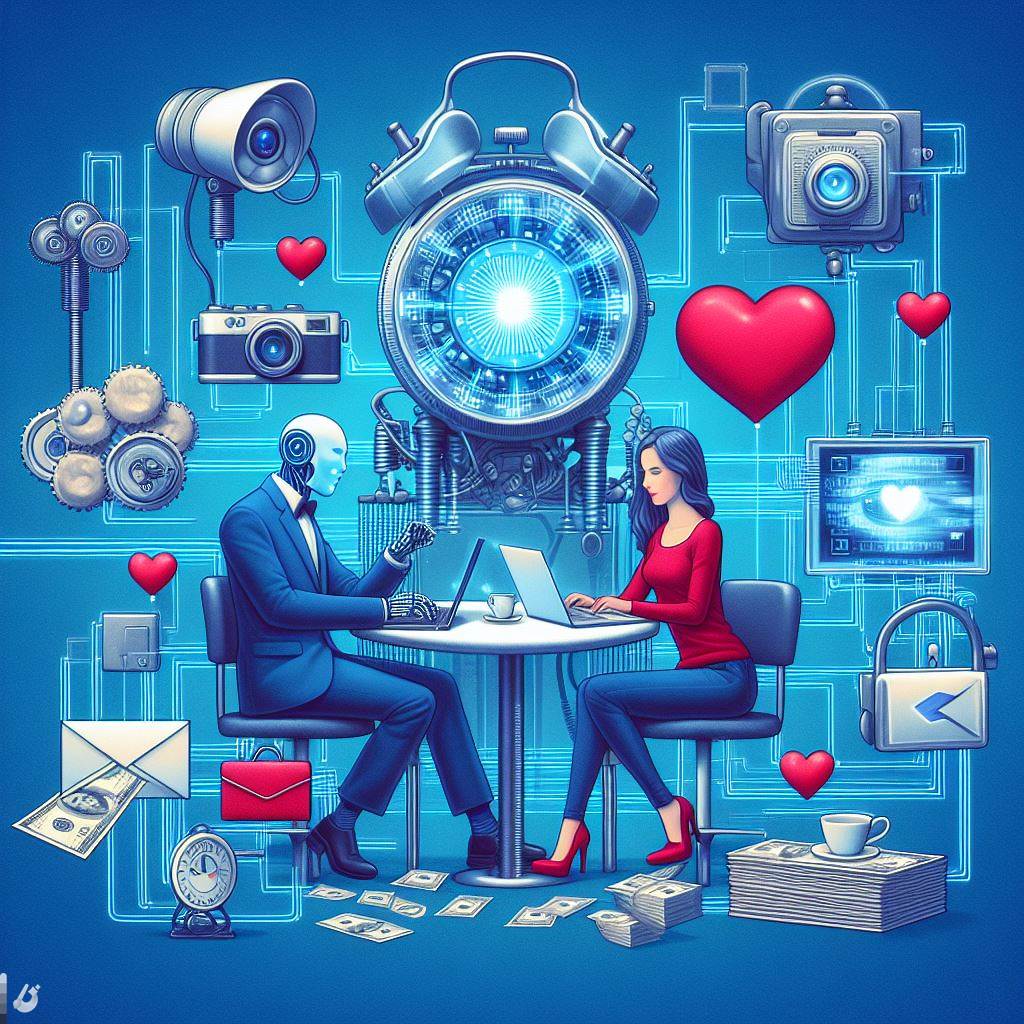

As cyber threats continue to evolve, a new menace is emerging, driven by artificial intelligence (AI). From dating apps to phishing emails, malicious actors are using AI to run scams of unprecedented sophistication, warns cybersecurity expert Kevin Gosschalk, CEO of Arkose Labs. According to Gosschalk, "2024 will be the year of the AI-generated scam, at scale," ushering in a new era of digital deception.

Dating apps, once considered a safe space to connect with potential partners, are now infiltrated by scammers who use AI-driven bots to create large numbers of fake accounts. These bots engage victims in seemingly genuine conversations and gradually draw them in. As Gosschalk explains, scammers use AI to "perfectly speak to a person to the point where they feel like the victim is kind of on the hook." Human operators then take over to carry out the final steps of the scam, manipulating victims into parting with their money.

The emotional toll on victims is significant, because scammers exploit the trust victims have built in what they believe to be genuine relationships. This kind of scam has become more common in recent months, leaving people emotionally devastated and financially exploited.

Phishing scams have also evolved with AI. Once easy to spot by their broken English, these messages are now written with generative AI, giving them impeccable grammar and a convincing air of authenticity. As a result, it is increasingly difficult for people to distinguish legitimate communications from fraudulent ones.

Beyond personal relationships, AI is being weaponized in e-commerce fraud. Unscrupulous sellers use AI to generate realistic-looking reviews that bolster their reputation and sales. There have also been reports of fake, AI-generated product listings on e-commerce platforms that trick consumers into buying items that often bear little resemblance to what was depicted.

The threat also extends to businesses: scammers are using AI to build deepfakes from recorded voices, impersonating CEOs to manipulate employees. Companies worry that their executives' voices could be exploited in social engineering attacks, which underscores the need for robust cybersecurity measures.

Looking ahead, Gosschalk anticipates a surge in AI-generated scams in 2024, particularly with the U.S. presidential election on the horizon. He expects bad actors to use AI to run sophisticated influence campaigns, spread misinformation, and sow confusion among the public about political issues and candidates.

While the high cost of computing power has so far limited the widespread use of AI in scams, experts expect these costs to fall, paving the way for a sharp increase in AI-driven cyber threats. As the technology advances, individuals and businesses must remain vigilant and adopt robust cybersecurity measures to counter the constantly shifting tactics of malicious actors.