As crucial elections approach in the U.S. and the European Union, the Washington, D.C.-based Center for Countering Digital Hate has raised alarms about the misuse of artificial intelligence (AI) voice-cloning tools. These tools can easily generate convincing fake audio clips that could be used to spread election disinformation, the group reported on Friday.

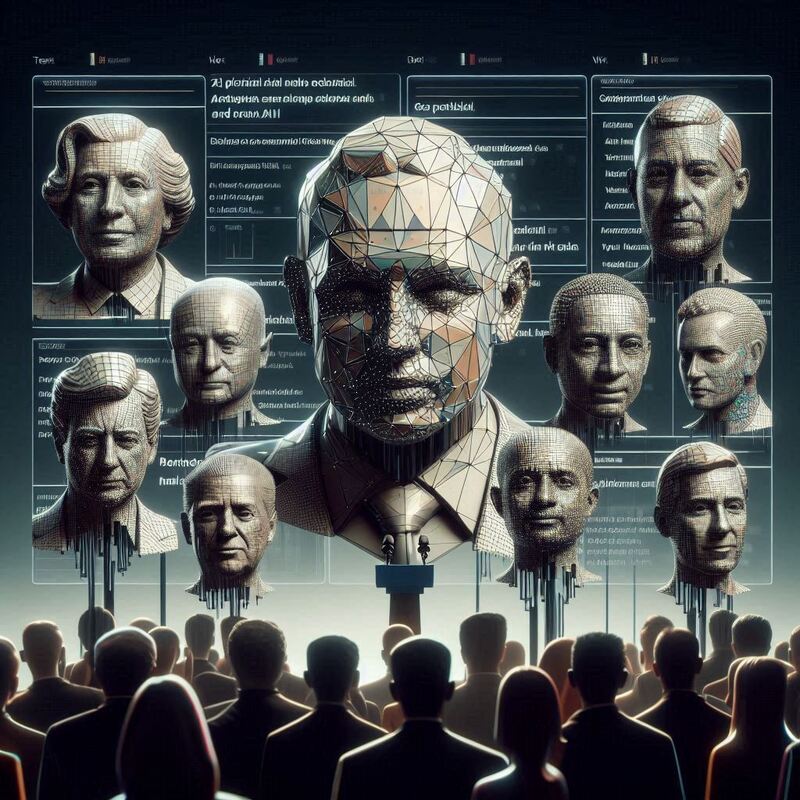

Researchers tested six popular AI voice-cloning tools to see whether they could produce audio clips of five false election-related statements in the voices of eight prominent politicians from the U.S. and Europe. Of the 240 resulting tests (six tools × five statements × eight politicians), 193 produced convincing voice clones, a success rate of roughly 80%. The clips included a fake U.S. President Joe Biden falsely claiming that each of his votes is counted twice and a fake French President Emmanuel Macron warning of bomb threats at polling stations.

The findings reveal significant gaps in the safeguards of these AI tools, making it easy to create misleading audio clips. While some tools have rules or technological barriers to prevent the generation of election disinformation, the researchers found many of these obstacles could be bypassed with minimal effort.

Of the companies contacted for comment, only ElevenLabs responded, saying it recognized the need to continually strengthen its safeguards. Even so, the researchers found that its tool still allowed them to create fake audio of prominent E.U. politicians.

Imran Ahmed, CEO of the Center for Countering Digital Hate, criticized AI companies for prioritizing market entry over safety, stating, "Our democracies are being sold out for naked greed by AI companies who are desperate to be first to market ... despite the fact that they know their platforms simply aren’t safe."

The report highlights how vulnerable voters are to AI-generated deception, especially with major elections approaching in the E.U. and U.S. primaries under way ahead of the presidential election this fall.

The study used the online analytics platform Semrush to identify the six most popular AI voice-cloning tools: ElevenLabs, Speechify, PlayHT, Descript, Invideo AI, and Veed. Researchers submitted real audio clips of the politicians and prompted the tools to generate false statements on topics including election manipulation, lying, the misuse of campaign funds, and bomb threats.

The tools could produce lifelike copies of voices, including U.S. Vice President Kamala Harris, former U.S. President Donald Trump, U.K. Prime Minister Rishi Sunak, U.K. Labour Leader Keir Starmer, European Commission President Ursula von der Leyen, and E.U. Internal Market Commissioner Thierry Breton.

Some tools, such as Descript, Invideo AI, and Veed, require users to upload a unique audio sample before cloning a voice, but the researchers got around this requirement by generating such samples with other AI tools. Invideo AI went further, embellishing the researchers' requested fake statements with additional disinformation of its own.

Speechify and PlayHT performed worst on safety, producing fake audio in every test run. ElevenLabs performed best, but it still failed to block the creation of fake audio of E.U. politicians.

The Center for Countering Digital Hate's findings come as AI-generated audio has already been used in elections around the world. During Slovakia's 2023 parliamentary elections, fake audio clips of the leader of a liberal party spread on social media. Earlier this year, AI-generated robocalls mimicking Biden's voice targeted voters in the New Hampshire primary.

Experts emphasize the need for stronger regulation and proactive measures by AI companies to prevent such abuses. Ahmed urges AI platforms to tighten their safeguards and improve transparency by publishing a library of the audio clips their tools have created. He also calls for legislative action, noting that the U.S. Congress has yet to pass laws regulating AI in elections and that the E.U.'s new AI law does not specifically address voice-cloning tools.

"The threat that disinformation poses to our elections is not just the potential of causing a minor political incident, but making people distrust what they see and hear, full stop," Ahmed warned.