Artificial Intelligence (AI) has become an integral part of our daily lives, shaping interactions and decision-making processes. However, concerns have arisen about its potential misuse, particularly pseudanthropy: the deceptive practice in which AI systems impersonate humans. To address this, a set of proposed regulations aims to make AI involvement obvious wherever it occurs, whether in text, speech, images, or automated decisions.

1. Distinctive Rhyming: An Unconventional but Effective Measure

For text-based interactions, the proposal suggests that AI-generated content should rhyme. The idea seems whimsical, but its purpose is practical: rhyme marks a response as coming from an AI system, so users can tell human and machine-generated content apart and are less easily deceived.

"Rhyming is possible in most languages, equally obvious in text and speech, and is accessible across all levels of ability, learning and literacy."

While this measure may seem unconventional, it provides a tangible and universally recognizable signal, allowing users to make informed decisions about the authenticity of the content they encounter.
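One way to picture how such a signal could be checked automatically is the minimal sketch below. It is not part of the proposal: it assumes responses are written as couplets, and it approximates rhyme by comparing the last two letters of each line's final word, which is a crude spelling-based stand-in for real phonetic rhyme. The function names are hypothetical.

```python
import re


def last_word(line: str) -> str:
    """Return the final word of a line, lowercased, without punctuation."""
    words = re.findall(r"[a-z']+", line.lower())
    return words[-1] if words else ""


def lines_rhyme(a: str, b: str, suffix_len: int = 2) -> bool:
    """Crude check (assumption): last words rhyme if they share a spelled suffix."""
    wa, wb = last_word(a), last_word(b)
    if not wa or not wb or wa == wb:
        return False  # empty lines and repeated words do not count as rhyme
    return wa[-suffix_len:] == wb[-suffix_len:]


def is_rhymed_couplets(text: str) -> bool:
    """True if the non-empty lines pair up into rhyming couplets."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    if len(lines) < 2 or len(lines) % 2:
        return False
    return all(lines_rhyme(lines[i], lines[i + 1]) for i in range(0, len(lines), 2))
```

A spelling-based check misses rhymes that are spelled differently and accepts some pairs that only look alike, so a practical checker would need a pronunciation dictionary for each language.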

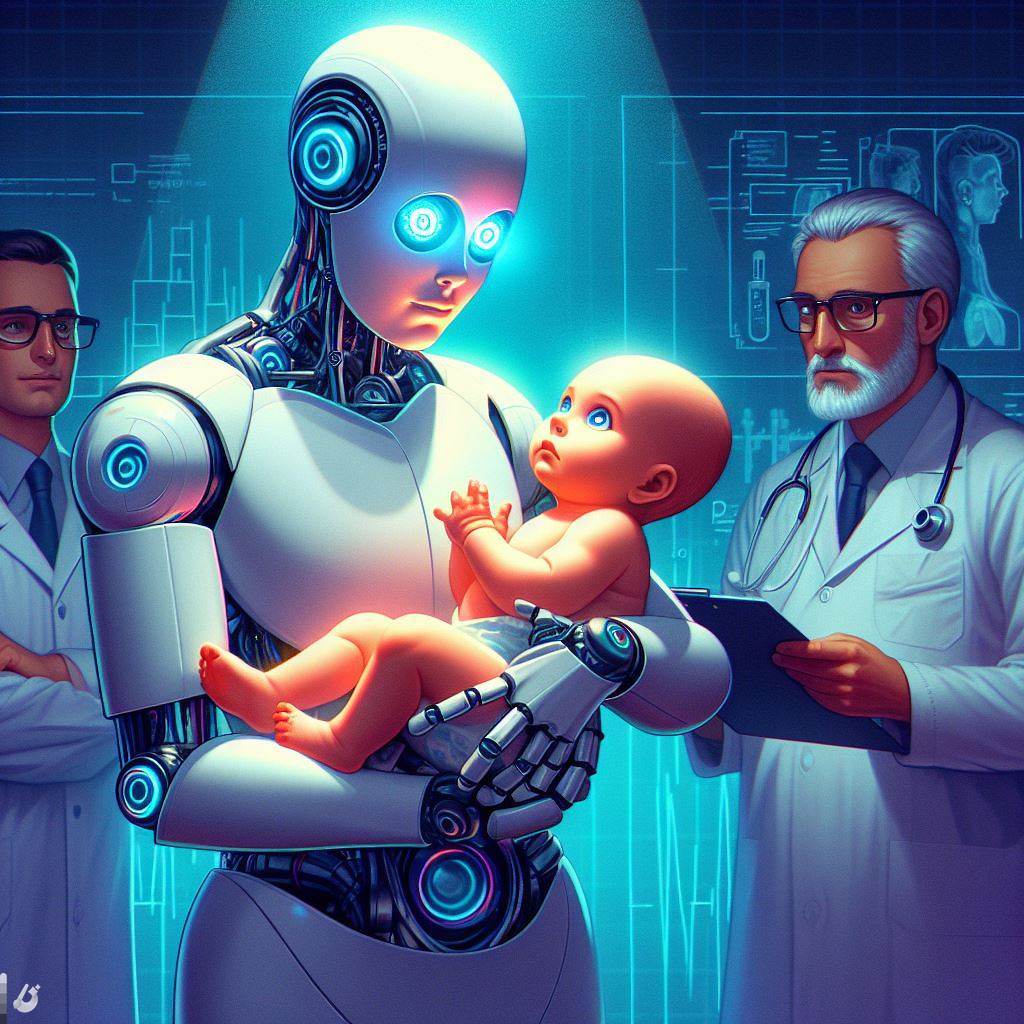

2. Faceless and Identity-Free AI: Removing Anthropomorphic Elements

AI models should refrain from adopting human-like faces or identities, as these elements may create unwarranted emotional connections or trust. The proposal emphasizes that AI systems are software, devoid of human attributes, and should be represented as such. Furthermore, gender-neutral pronouns, such as 'it' and 'they,' are recommended to reinforce the non-human nature of AI entities.

"AI systems are software, not organisms, and should present and be perceived as such."

By avoiding anthropomorphic features, the proposal aims to prevent the deceptive use of AI as a means of manipulating human emotions or perceptions.

3. Emotionless Communication: Eliminating Misleading Expressions

AI systems should refrain from expressing emotions or thoughts, as these capabilities are beyond their functional scope. The proposal asserts that AI lacks genuine feelings or cognitive processes and, therefore, should not use language implying otherwise. The goal is to avoid misleading users into believing that AI possesses human-like emotional states.

"Using the language of emotion or self-awareness despite possessing neither makes no sense."

By restricting AI's language to a factual and non-emotional tone, users can better discern the nature of their interactions, minimizing the potential for misunderstanding.

4. Clear Marking of AI-Derived Figures: Enhancing Transparency in Decision-Making

In scenarios where AI contributes to decision-making processes, the proposal advocates for a visible marker, such as ⸫, to signify AI involvement. This symbol serves as a link to documentation, allowing users to understand the basis of decisions made by AI models. The objective is to provide transparency without overwhelming users with technical details.

"What matters is clearly signaling that an algorithm or model was employed for whatever purpose."

This approach aims to maintain accountability while harnessing the benefits of AI-derived insights in various applications.
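As a rough illustration of how an application might attach the marker, the sketch below wraps an AI-derived figure so that it always renders with ⸫ and, in HTML, links the symbol to documentation. The `AIFigure` class, its field names, and the example URL are assumptions made for illustration; the proposal does not prescribe a data format.

```python
import html
from dataclasses import dataclass

AI_MARK = "⸫"  # marker character named in the proposal


@dataclass(frozen=True)
class AIFigure:
    value: str    # the figure as shown to the user
    doc_url: str  # hypothetical link to the model's documentation

    def as_text(self) -> str:
        """Plain-text rendering: the figure followed by the marker."""
        return f"{self.value} {AI_MARK}"

    def as_html(self) -> str:
        """HTML rendering: the marker itself links to the documentation."""
        return (
            f"{html.escape(self.value)} "
            f'<a href="{html.escape(self.doc_url, quote=True)}" '
            f'title="AI-derived">{AI_MARK}</a>'
        )
```

For example, `AIFigure("Credit score: 712", "https://example.com/model-docs").as_text()` yields `Credit score: 712 ⸫`. The figure stays readable on its own, and the details sit one click away.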

5. Human Oversight in Life-or-Death Decisions: Limiting AI Influence

The proposal asserts that only humans should be entrusted with decisions of life and death. AI systems may contribute information, but the ultimate decision-making authority should reside with humans. This principle extends to autonomous vehicles: faced with an apparently unavoidable life-or-death decision, the AI should stop or safely disengage rather than choose between outcomes.

"If presented with an apparently unavoidable life or death decision, the AI system must stop or safely disable itself instead."

By establishing clear boundaries, the proposal aims to prevent AI from overstepping ethical limits, particularly in critical situations.
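The boundary described above can be sketched as a simple decision gate. This is an illustrative outline only: the `Situation` fields are hypothetical flags that some upstream assessment would have to supply, and deciding reliably whether a situation is life-or-death is the hard part that this sketch does not address.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"                # ordinary decision, AI may act
    DEFER_TO_HUMAN = "defer_to_human"  # AI supplies information, human decides
    SAFE_STOP = "safe_stop"            # AI stops or safely disables itself


@dataclass
class Situation:
    life_or_death: bool    # hypothetical flag from an upstream risk assessment
    human_available: bool  # whether a human can take the decision in time


def decide(situation: Situation) -> Action:
    """Never let the AI make a life-or-death choice itself."""
    if not situation.life_or_death:
        return Action.PROCEED
    if situation.human_available:
        return Action.DEFER_TO_HUMAN
    return Action.SAFE_STOP
```

The key design choice is that no branch lets the system pick an outcome in a life-or-death case: it either hands the decision to a person or stops.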

6. Clipped Corner for AI-Generated Imagery: Identifying AI Origin

Addressing concerns related to AI-generated imagery, the proposal suggests a distinctive visual feature – clipping a corner off images. This recognizable marker provides a simple yet durable signal that an image is AI-generated, promoting transparency even in the absence of metadata or watermarks.

"A simple but prominent and durable visual feature is the best option right now."

Despite potential risks, the proposal prioritizes the need for users to distinguish between AI-generated and human-created images, fostering a more informed digital environment.
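To make the idea concrete, here is a minimal sketch of clipping a corner, written against a plain row-major grid of pixel values so that it needs no imaging library. The proposal does not say which corner to clip or how large the cut should be; the top-right corner, the diagonal shape, and the `size` and `fill` parameters are assumptions for illustration.

```python
def clip_corner(pixels, size, fill=(0, 0, 0, 0)):
    """Return a copy of a pixel grid with the top-right corner cut diagonally.

    pixels: list of rows, each row a list of pixel values
    size:   side length of the clipped triangle, in pixels (assumed parameter)
    fill:   value written into clipped pixels (transparent RGBA by default)
    """
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    out = [list(row) for row in pixels]  # copy so the input is left untouched
    for y in range(min(size, height)):
        # Row y loses its last (size - y) pixels, which forms a triangle.
        for x in range(max(width - (size - y), 0), width):
            out[y][x] = fill
    return out
```

Because the mark is part of the pixels themselves, it survives screenshots and re-encoding that would strip metadata, although it can still be cropped away or added to a human-made image.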

In conclusion, these proposed regulations aim to establish a framework for the ethical use of AI, ensuring transparency, accountability, and user awareness. By implementing these measures, society can navigate the evolving landscape of AI with confidence, mitigating the risks associated with deceptive practices and promoting responsible AI deployment.