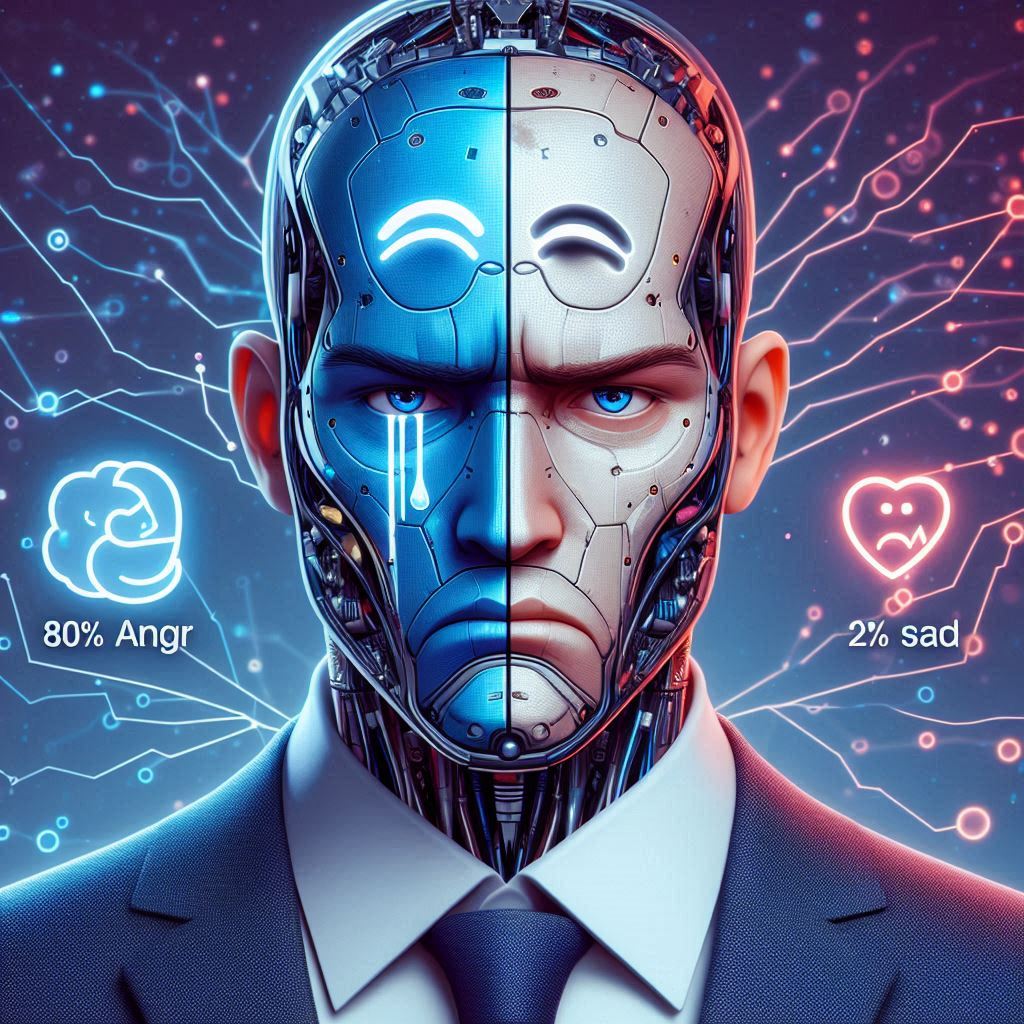

The burgeoning field of Emotional AI promises to revolutionize human-machine interactions by purportedly understanding and responding to human emotions. However, as advancements in this technology accelerate, concerns regarding its accuracy, ethical implications, and potential for misuse are increasingly prominent.

Hume, a Manhattan-based startup, claims to have developed "the world's first voice AI with emotional intelligence," leveraging large language models like OpenAI's GPT-4o to interpret emotional cues in speech. The company's $50 million funding round highlights the growing commercial interest in Emotional AI, an industry projected to surpass $50 billion in value this year.

Critics, such as Prof. Andrew McStay from Bangor University's Emotional AI Lab, caution against overstating Emotional AI's capabilities. McStay argues that emotions are complex and subjective, which makes them difficult for AI systems to gauge and interpret accurately. Ethical concerns also abound regarding potential misuse, from mass manipulation to Orwellian scenarios of emotional surveillance.

A pivotal issue is AI bias, where algorithms reflect the biases inherent in their training data. Prof. Lisa Feldman Barrett of Northeastern University underscores that Emotional AI's promises often exceed its scientific foundation. She notes that while some claim Emotional AI can discern emotions from facial expressions, empirical evidence suggests otherwise, with no consensus on universally identifiable emotional expressions.

The European Union has taken proactive steps with the AI Act of May 2024, prohibiting AI applications that manipulate human behavior and restricting emotion recognition technologies in sensitive spaces like workplaces and schools. This legislative response aims to mitigate potential harms while leaving room for technological advancement.

In contrast, the United Kingdom lacks specific legislation, although its Information Commissioner's Office has cautioned against emotional analysis, citing the field's pseudoscientific nature.

Despite these regulatory efforts, concerns persist over the misuse of Emotional AI for deceptive purposes. Randi Williams from the Algorithmic Justice League warns of potential exploitation for commercial and political gains, reminiscent of past scandals involving data and psychological profiling.

To address ethical challenges, efforts such as the Hume Initiative have emerged, advocating for responsible AI deployment. However, as Emotional AI continues to evolve, keeping technological advancements aligned with societal values remains paramount. As Prof. McStay emphasizes, understanding these dynamics is crucial to navigating Emotional AI ethically.

While Emotional AI holds promise for enhancing user experience and therapeutic applications, its implementation demands rigorous oversight and ethical scrutiny to safeguard against potential misuse and uphold societal trust in technological innovation.