Fujitsu, in collaboration with AI researchers, introduces Fugaku-LLM, a large language model trained on Japan's Fugaku supercomputer.

In response to Asian companies' demand for generative AI solutions aligned with local languages and values, Fugaku-LLM aims to counter the dominance of U.S.-centered language model development.

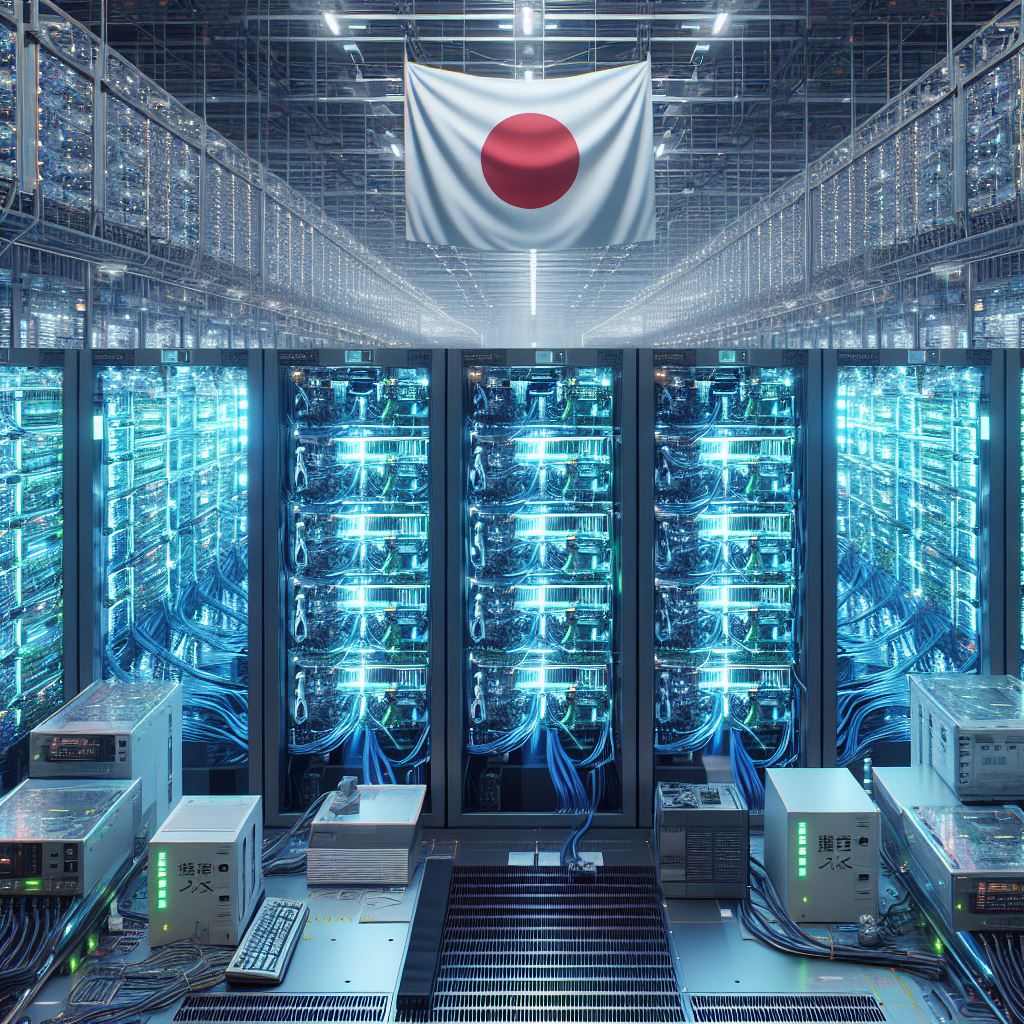

Because Japan faces a shortage of computational resources for AI research, the team turned to Fugaku. The supercomputer, built on Fujitsu’s A64FX microprocessor, has roughly 160,000 CPUs and was instrumental in training Fugaku-LLM.

Trained predominantly on Japanese text, Fugaku-LLM has 13 billion parameters and outperforms earlier models such as Alibaba Cloud's Qwen-7B and RakutenAI-7B.
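To give a sense of scale, the short sketch below estimates how much memory the weights of a 13-billion-parameter model occupy in a few common numeric formats. The bytes-per-parameter values are standard sizes for those formats, not figures from the Fugaku-LLM team, and the estimate covers weights only (no activations, optimizer state, or cache).

```python
# Rough weight-memory estimate for a 13-billion-parameter model.
PARAMS = 13_000_000_000  # parameter count stated in the article

# Standard storage size of one parameter in each format (assumption:
# the article does not say which format the released weights use).
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(params: int, bytes_per_param: int) -> float:
    """Return weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return params * bytes_per_param / 1e9


for fmt, size in BYTES_PER_PARAM.items():
    print(f"{fmt}: {weight_memory_gb(PARAMS, size):.0f} GB")
```

At 16-bit precision the weights alone come to about 26 GB, which is why a model of this size typically needs a high-memory accelerator or quantization to run on a single device.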

Fugaku-LLM excels in natural language processing and dialogue, including the use of honorific language (Keigo). It's available for both commercial and non-commercial applications via platforms like Hugging Face and SambaNova.
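For readers who want to try the model from Hugging Face, the following is a minimal sketch using the `transformers` library. The repository name in `MODEL_ID` is an assumption and should be checked against the model's Hugging Face page, as should the license terms. The helper `generate` is a hypothetical name for illustration, and `device_map="auto"` additionally requires the `accelerate` package.

```python
# Minimal sketch: text generation with a causal LM from Hugging Face.
# MODEL_ID is an assumed repository name; verify it before use.
MODEL_ID = "Fugaku-LLM/Fugaku-LLM-13B"


def generate(prompt: str, max_new_tokens: int = 64) -> str:
    """Download the model if needed and continue the given prompt."""
    # Imported here so the heavy dependencies load only when called.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.bfloat16,  # 16-bit weights to reduce memory use
        device_map="auto",           # place layers on available devices
    )
    # Encode to input IDs only, then move them to the model's device.
    input_ids = tokenizer.encode(
        prompt, add_special_tokens=False, return_tensors="pt"
    ).to(model.device)
    output_ids = model.generate(input_ids, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example call (downloads tens of gigabytes of weights on first use):
# print(generate("スーパーコンピュータ「富岳」とは"))
```

The imports sit inside the function so that the file can be loaded and inspected on a machine without `torch` installed; in a real script they would normally go at the top.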

Users must comply with the licensing terms of use and are responsible for managing any legal or ethical issues arising from their use of the model. The developers provide no warranties of performance or accuracy.

Fujitsu trained Fugaku-LLM in collaboration with RIKEN's supercomputer center, Tokyo Institute of Technology, Tohoku University, and CyberAgent. Training on Fugaku processed 380 billion tokens of text, math, and code data.
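The token and parameter counts allow a back-of-the-envelope estimate of the training workload. The sketch below uses the common rule of thumb that training a dense transformer costs about 6 floating-point operations per parameter per token. That rule is an assumption brought in for illustration, not a figure reported by the developers, so the result is only an order-of-magnitude guide.

```python
# Back-of-the-envelope training workload for Fugaku-LLM.
PARAMS = 13e9   # parameters, as stated in the article
TOKENS = 380e9  # training tokens, as stated in the article

# How many training tokens were seen per model parameter.
tokens_per_param = TOKENS / PARAMS

# Rule-of-thumb estimate (assumption): ~6 FLOPs per parameter per token
# for a forward and backward pass through a dense transformer.
approx_train_flops = 6 * PARAMS * TOKENS

print(f"tokens per parameter: {tokens_per_param:.1f}")
print(f"approximate training FLOPs: {approx_train_flops:.2e}")
```

Under these assumptions the model saw roughly 29 tokens per parameter, and the total workload is on the order of 3 × 10^22 floating-point operations.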

Training used distributed methods optimized for large-scale computing systems such as Fugaku, making computation six times faster than with traditional methods.

The development of Fugaku-LLM not only addresses immediate needs but also contributes to Japan's future advantage in AI research. Despite slipping in rankings, Fugaku remains a formidable supercomputing asset outside the U.S.