OpenAI, the creator of ChatGPT, has outlined its approach to the 2024 elections taking place around the world. The approach focuses on transparency, access to accurate voting information, and preventing misuse of AI. The company says it is committed to protecting election integrity and to making sure its AI services are not used to undermine the electoral process. OpenAI stresses that securing elections takes a collaborative effort. It has also set up a cross-functional team dedicated to election-related work, which is tasked with responding quickly to potential abuse.

Key Initiatives:

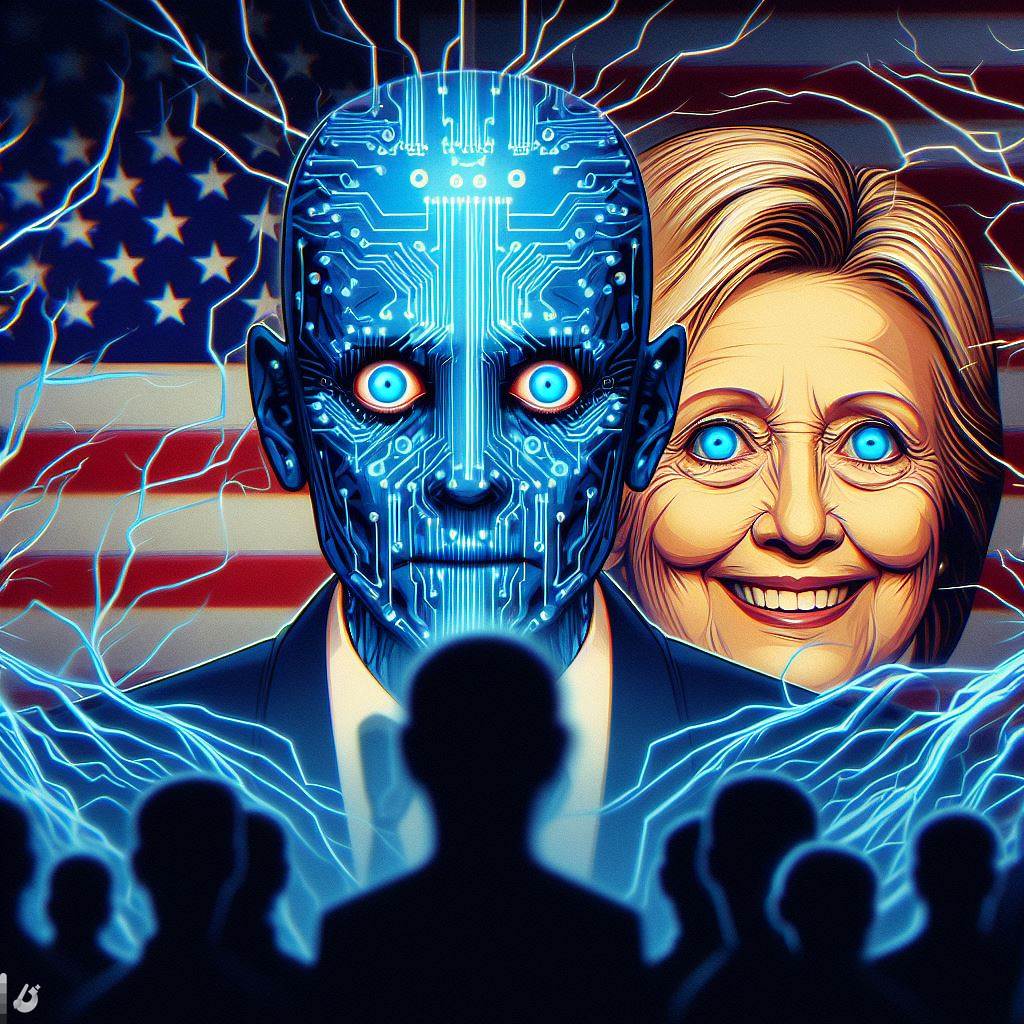

Preventing Abuse: OpenAI is working to anticipate and prevent abuse such as misleading deepfakes, chatbots impersonating candidates, and scaled influence operations. One existing safeguard is a set of guardrails on DALL·E, which decline requests to generate images of real people, including political candidates.

Regulating Political Campaigning and Lobbying: OpenAI currently does not allow people to build applications for political campaigning and lobbying. The restriction is meant as a safeguard against its tools being misused in political contexts.

Real-time Information Updates: OpenAI is continuing to update ChatGPT so that it can give accurate information drawn from real-time news reporting around the world. For further details, ChatGPT directs users to official voting websites.

Industry-Wide Focus on AI and Elections: AI's potential influence on elections has drawn attention across the tech industry. Microsoft has released a report on how AI could be used to sway voter sentiment on social media. Google has also acted early: it now requires political advertisers to disclose AI-generated content in campaign ads, and it has limited the election-related queries its Bard AI tool will answer.

OpenAI's proactive approach reflects a broader industry trend, in which tech companies are acknowledging the ethical responsibilities that come with using AI in political contexts. Its commitment to transparency, misuse prevention, and continual improvement fits within a wider effort to protect the democratic process from the threats AI technology may pose.