To run Magic Animate locally, first download the pretrained base models: Stable Diffusion V1.5 and the MSE-finetuned VAE. Then install the required dependencies and activate the conda environment. If you would rather not set anything up, you can try the online demos on Hugging Face or Replicate, or use the Replicate API to generate animated videos programmatically.
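The model download step can be sketched in Python as follows. This is a minimal sketch, not the project's own setup script: the Hugging Face repository IDs, the `pretrained_models` directory name, and the helper names `plan_downloads` and `download_all` are assumptions that do not come from the text above, so check them against the project's README before relying on them. The only third-party call is `snapshot_download` from the `huggingface_hub` package.

```python
from pathlib import Path

# ASSUMPTION: these Hugging Face repo IDs are illustrative guesses for the two
# base models named above; hosting locations change, so verify them first.
PRETRAINED_REPOS = {
    "stable-diffusion-v1-5": "runwayml/stable-diffusion-v1-5",
    "sd-vae-ft-mse": "stabilityai/sd-vae-ft-mse",
}


def plan_downloads(root="pretrained_models"):
    """Map each repo ID to the local directory it would be stored in."""
    return {
        repo_id: Path(root) / name
        for name, repo_id in PRETRAINED_REPOS.items()
    }


def download_all(root="pretrained_models"):
    """Fetch every planned repo. Needs network access and huggingface_hub."""
    # Imported lazily so plan_downloads() works without the dependency.
    from huggingface_hub import snapshot_download

    for repo_id, target in plan_downloads(root).items():
        snapshot_download(repo_id=repo_id, local_dir=str(target))


if __name__ == "__main__":
    # Print the plan only; call download_all() to actually fetch the weights.
    for repo_id, target in plan_downloads().items():
        print(f"{repo_id} -> {target}")
```

Running the script prints the planned destinations without downloading anything. Calling `download_all()` performs the actual transfer, which is several gigabytes for the Stable Diffusion weights.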

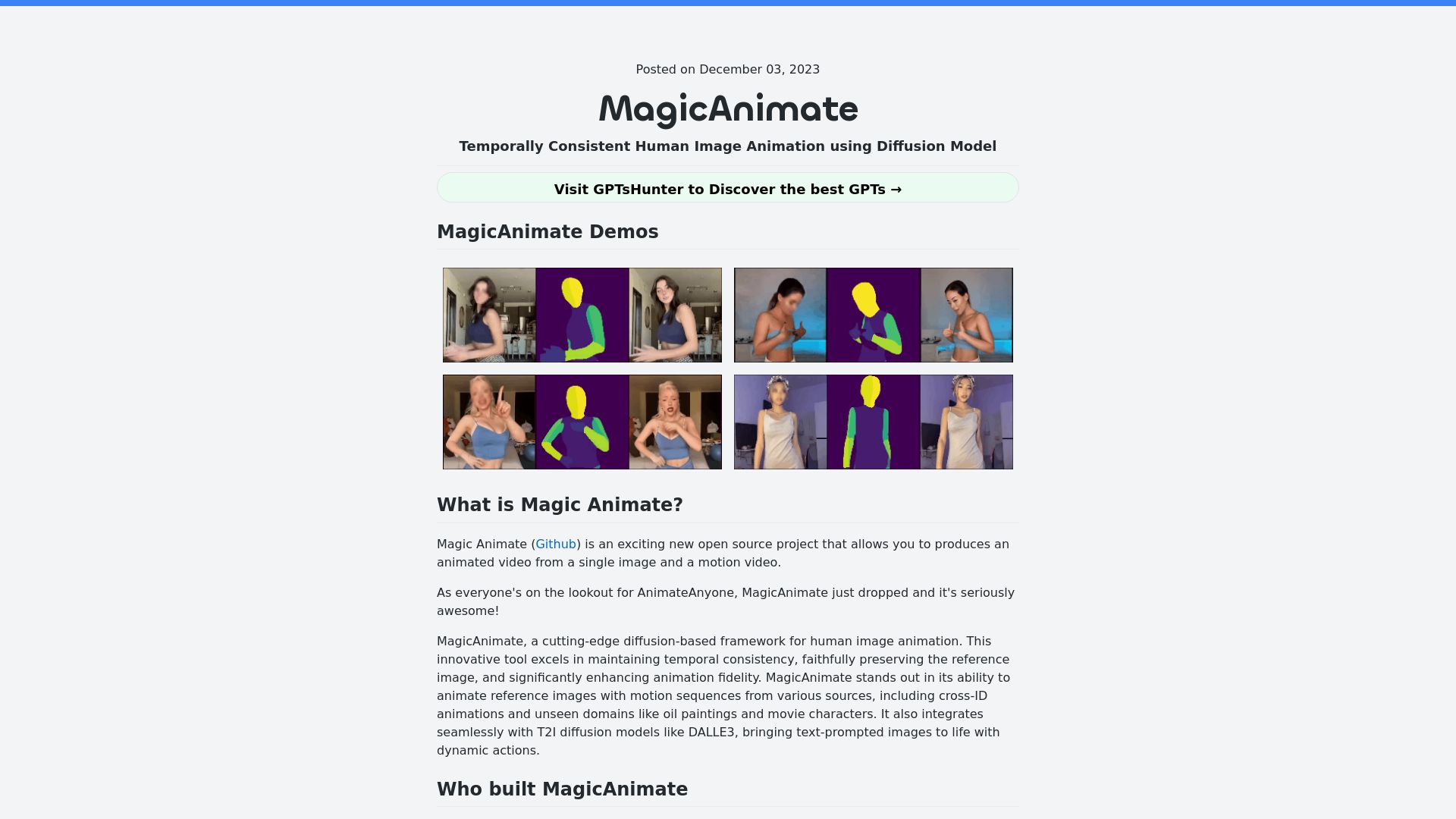

- Animation creation from a single image and a motion video
- Maintaining temporal consistency
- Preserving the reference image
- Enhancing animation fidelity
- Integration with T2I diffusion models like DALL-E 3
- Cross-ID animations and animations from unseen domains
- Text-prompted image animation
- High consistency among dance video solutions