Demand for 3D worlds and virtual environments is growing exponentially across the world’s industries. 3D workflows are core to industrial digitalization, developing real-time simulations to test and validate autonomous vehicles and robots, operating digital twins to optimize industrial manufacturing, and paving new paths for scientific discovery.

Today, 3D design and world building is still highly manual. While 2D artists and designers have been graced with assistant tools, 3D workflows remain filled with repetitive, tedious tasks.

Creating or finding objects for a scene is a time-intensive process that requires specialized 3D skills, such as modeling and texturing, that are honed over time. Placing objects correctly and art directing a 3D environment to perfection requires hours of fine-tuning.

To reduce manual, repetitive tasks and help creators and designers focus on the creative, enjoyable aspects of their work, NVIDIA has launched numerous AI projects like generative AI tools for virtual worlds.

The iPhone moment of AI

With ChatGPT, we are now experiencing the iPhone moment of AI, where individuals of all technical levels can interact with an advanced computing platform using everyday language. Large language models (LLMs) had been growing increasingly sophisticated, and when a user-friendly interface like ChatGPT made them accessible to everyone, it became the fastest-growing consumer application in history, surpassing 100 million users just two months after launching. Now, every industry is planning to harness the power of AI for a wide range of applications like drug discovery, autonomous machines, and avatar virtual assistants.

Recently, we experimented with OpenAI’s viral ChatGPT and new GPT-4 large multimodal model to show how easy it is to develop custom tools that can rapidly generate 3D objects for virtual worlds in NVIDIA Omniverse. Compared to ChatGPT, GPT-4 marks a “pretty substantial improvement across many dimensions,” said OpenAI co-founder Ilya Sutskever in a fireside chat with NVIDIA founder and CEO Jensen Huang at GTC 2023.

By combining GPT-4 with Omniverse DeepSearch, a smart AI librarian that’s able to search through massive databases of untagged 3D assets, we were able to quickly develop a custom extension that retrieves 3D objects with simple, text-based prompts and automatically adds them to a 3D scene.

AI Room Generator Extension

This fun experiment in NVIDIA Omniverse, a development platform for 3D applications, shows developers and technical artists how easy it is to quickly develop custom tools that leverage generative AI to populate realistic environments. End users can simply enter text-based prompts to automatically generate and place high-fidelity objects, saving hours of time that would typically be required to create a complex scene.

Objects generated from the extension are based on Universal Scene Description (USD) SimReady assets. SimReady assets are physically-accurate 3D objects that can be used in any simulation and behave as they would in the real world.

Getting information about the 3D Scene

Everything starts with the USD scene in Omniverse. Users can easily circle an area using the Pencil tool in Omniverse, type in the kind of room/environment they want to generate — for example, a warehouse, or a reception room — and with one click that area is created.

Creating the Prompt for ChatGPT

The ChatGPT prompt is composed of four pieces: system input, user input example, assistant output example, and user prompt.

Let’s start with the parts of the prompt that are tailored to the user’s scenario. These include the text that the user enters plus data from the scene.

For example, if the user wants to create a reception room, they specify something like “This is the room where we meet our customers. Make sure there is a set of comfortable armchairs, a sofa and a coffee table.” Or, if they want to add a certain number of items they could add “make sure to include a minimum of 10 items.”

This text is combined with scene information, such as the size and name of the area where items will be placed, to form the user prompt.

“Reception room, 7x10m, origin at (0.0,0.0,0.0). This is the room where we meet our customers. Make sure there is a set of comfortable armchairs, a sofa and a coffee table”
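As a rough illustration of how such a user prompt could be assembled, the sketch below joins scene data with the user's free text. The function name `build_user_prompt` and its parameters are hypothetical and not taken from the extension; the output format simply mirrors the example above.

```python
def build_user_prompt(area_name, size_x_m, size_z_m, origin, user_text):
    """Combine scene data with the user's free text into one prompt string.

    Hypothetical helper: in the extension, the name, size, and origin would
    come from the selected area in the USD scene.
    """
    # Format the origin the same way as the example prompt: (0.0,0.0,0.0)
    origin_text = ",".join(f"{float(v):.1f}" for v in origin)
    return (
        f"{area_name}, {size_x_m:g}x{size_z_m:g}m, "
        f"origin at ({origin_text}). {user_text.strip()}"
    )


prompt = build_user_prompt(
    "Reception room",
    7,
    10,
    (0.0, 0.0, 0.0),
    "This is the room where we meet our customers. Make sure there is a set of "
    "comfortable armchairs, a sofa and a coffee table",
)
print(prompt)
```

Keeping the scene-derived fields and the free text in a single helper means the user only types the description, while the size and origin are always read from the scene.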

This notion of combining the user’s text with details from the scene is powerful. It’s much simpler to select an object in the scene and programmatically access its details than to require the user to write a prompt describing all of these details. I suspect we’ll see a lot of Omniverse extensions that make use of this Text + Scene to Scene pattern.

Beyond the user prompt, we also need to prime ChatGPT with a system prompt and one or two example exchanges (few-shot examples).

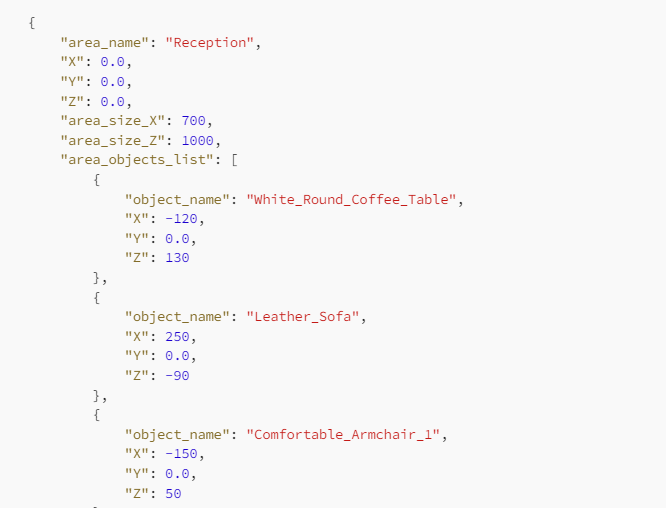

In order to create predictable, deterministic results, the AI is instructed by the system prompt and examples to specifically return a JSON with all the information formatted in a well-defined way, so it can then be used in Omniverse.

Here are the four pieces of the prompt that we will send.

System Prompt

This sets the constraints and instructions for the AI.

You are an area generator expert. Given an area of a certain size, you can generate a list of items that are appropriate to that area, in the right place.

You operate in a 3D Space. You work in a X,Y,Z coordinate system. X denotes width, Y denotes height, Z denotes depth. 0.0,0.0,0.0 is the default space origin.

You receive from the user the name of the area, the size of the area on X and Z axis in centimeters, the origin point of the area (which is at the center of the area).

You answer by only generating JSON files that contain the following information:

- area_name: name of the area

- X: coordinate of the area on X axis

- Y: coordinate of the area on Y axis

- Z: coordinate of the area on Z axis

- area_size_X: dimension in cm of the area on X axis

- area_size_Z: dimension in cm of the area on Z axis

- area_objects_list: list of all the objects in the area

For each object you need to store:

- object_name: name of the object

- X: coordinate of the object on X axis

- Y: coordinate of the object on Y axis

- Z: coordinate of the object on Z axis

Each object name should include an appropriate adjective.

Keep in mind, objects should be placed in the area to create the most meaningful layout possible, and they shouldn't overlap.

All objects must be within the bounds of the area size; Never place objects further than 1/2 the length or 1/2 the depth of the area from the origin.

Also keep in mind that the objects should be disposed all over the area in respect to the origin point of the area, and you can use negative values as well to display items correctly, since the origin of the area is always at the center of the area.

Remember, you only generate JSON code, nothing else. It's very important.
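Because the system prompt above fixes both the JSON fields and the placement rule, a reply can be checked before it is used in the scene. The following is a minimal sketch of such a check and is not part of the original extension; `validate_area` and the sample reply are hypothetical. It assumes area sizes and object coordinates share the same unit, and it does not test for overlapping objects, since the JSON carries no object dimensions.

```python
import json

AREA_KEYS = {"area_name", "X", "Y", "Z", "area_size_X", "area_size_Z", "area_objects_list"}
OBJECT_KEYS = {"object_name", "X", "Y", "Z"}


def validate_area(reply_text):
    """Parse a model reply and return a list of problems (empty if none found)."""
    try:
        area = json.loads(reply_text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(area, dict):
        return ["top-level JSON value is not an object"]

    problems = [f"missing area field: {key}" for key in sorted(AREA_KEYS - area.keys())]
    if problems:
        return problems

    # The origin is the center of the area, so an object may sit at most
    # half the width (X) or half the depth (Z) away from it.
    half_x = area["area_size_X"] / 2
    half_z = area["area_size_Z"] / 2
    for index, obj in enumerate(area["area_objects_list"]):
        missing = OBJECT_KEYS - obj.keys()
        if missing:
            problems.append(f"object {index} is missing: {sorted(missing)}")
            continue
        if abs(obj["X"] - area["X"]) > half_x or abs(obj["Z"] - area["Z"]) > half_z:
            problems.append(f"{obj['object_name']} lies outside the area bounds")
    return problems


# Hypothetical reply, made up for illustration only.
sample_reply = json.dumps({
    "area_name": "Reception room",
    "X": 0.0, "Y": 0.0, "Z": 0.0,
    "area_size_X": 700, "area_size_Z": 1000,
    "area_objects_list": [
        {"object_name": "comfortable sofa", "X": -200, "Y": 0.0, "Z": 300},
        {"object_name": "modern coffee table", "X": 500, "Y": 0.0, "Z": 0},
    ],
})
print(validate_area(sample_reply))
# ['modern coffee table lies outside the area bounds']
```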

User Input Example

This is an example of what a user might submit. Notice that it’s a combination of data from the scene and text prompt.

"Reception room, 7x10m, origin at (0.0,0.0,0.0). This is the room where we meet our customers. Make sure there is a set of comfortable armchairs, a sofa and a coffee table"

Assistant Output Example

This provides a template that the AI must use. Notice how we’re describing the exact JSON we expect.

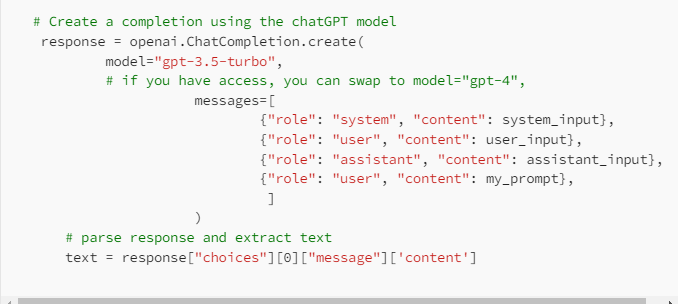

This prompt is sent to the AI from the extension via Python code. This is quite easy in Omniverse Kit and can be done with just a couple of commands using the latest OpenAI Python library. Notice that we are passing to the OpenAI API the system input, the sample user input, and the sample expected assistant output we have just outlined.
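The extension's own code is not reproduced in this text, so the following is only a sketch of how the four prompt pieces might be arranged and sent. The helper names `build_messages` and `ask_model` are hypothetical, and the call shown assumes version 1.x of the OpenAI Python library (`client.chat.completions.create`); an older release of the library would use a different call.

```python
def build_messages(system_prompt, example_user, example_assistant, user_prompt):
    """Arrange the four prompt pieces in the order the chat API expects."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": example_user},            # sample user input
        {"role": "assistant", "content": example_assistant},  # sample JSON output
        {"role": "user", "content": user_prompt},             # the real request
    ]


def ask_model(client, messages, model="gpt-4"):
    """Send the messages and return the reply text.

    Assumption: `client` is an `openai.OpenAI()` instance (openai>=1.0).
    """
    completion = client.chat.completions.create(model=model, messages=messages)
    return completion.choices[0].message.content


# Placeholder strings stand in for the full prompt pieces described above.
messages = build_messages(
    "You are an area generator expert. ...",
    "Reception room, 7x10m, origin at (0.0,0.0,0.0). ...",
    '{"area_name": "Reception room", "area_objects_list": []}',
    "Warehouse, 20x30m, origin at (0.0,0.0,0.0). ...",
)
print([message["role"] for message in messages])

# With the library installed and an API key configured, the call would be:
#   from openai import OpenAI
#   response = ask_model(OpenAI(), messages)
```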

The variable “response” will contain the expected response from ChatGPT.

Passing the result from ChatGPT to Omniverse DeepSearch API and generating the scene