First, we will head over to Cohere and create an account. You can do this here: https://cohere.ai/. Once the account is created, we will get an API key, which we will need later in our app.

Next, we can check out the Cohere Playground. It's great for testing your ideas and getting started with a project: the UI is clean, and you can export your code in multiple languages. For this tutorial, we will add a couple of examples in the Cohere Classify Playground like this:

You can see that each example has a text and a label. The label can be toxic or benign. With these examples the model can better determine if a new text is toxic or not.
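To make the shape of these examples concrete, here is a minimal sketch of how they could be held in Python before sending them anywhere. The texts, the `check_examples` helper and the `min_per_label` default are assumptions made for illustration; they are not taken from the Playground or from the Cohere client.

```python
from collections import Counter

# Hypothetical training examples; the texts are made up for illustration.
EXAMPLES = [
    ("you are a wonderful teammate", "benign"),
    ("thanks for the quick reply", "benign"),
    ("nobody wants you here, just quit", "toxic"),
    ("you are a worthless idiot", "toxic"),
]

ALLOWED_LABELS = {"toxic", "benign"}


def check_examples(examples, min_per_label=2):
    """Validate (text, label) pairs and return the example count per label.

    min_per_label is an assumption of this sketch; check the Cohere
    documentation for the actual minimum.
    """
    counts = Counter()
    for text, label in examples:
        if not text.strip():
            raise ValueError("example text must not be empty")
        if label not in ALLOWED_LABELS:
            raise ValueError(f"unknown label: {label!r}")
        counts[label] += 1
    for label in ALLOWED_LABELS:
        if counts[label] < min_per_label:
            raise ValueError(f"need at least {min_per_label} examples for {label!r}")
    return dict(counts)


print(check_examples(EXAMPLES))
```

Keeping the examples balanced across both labels is a reasonable default, since the model only has these few texts to learn the difference from.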

You can now test the classification by entering some input texts below the examples field. You can see that the model is already pretty good at classifying the text.

That's already pretty cool! But how do we get this working in our app? We can simply press the "Export code" button and choose the language we want to use. You can choose from Python, Node.js, and Go, as well as cURL and the Cohere CLI. For now we will use Python.

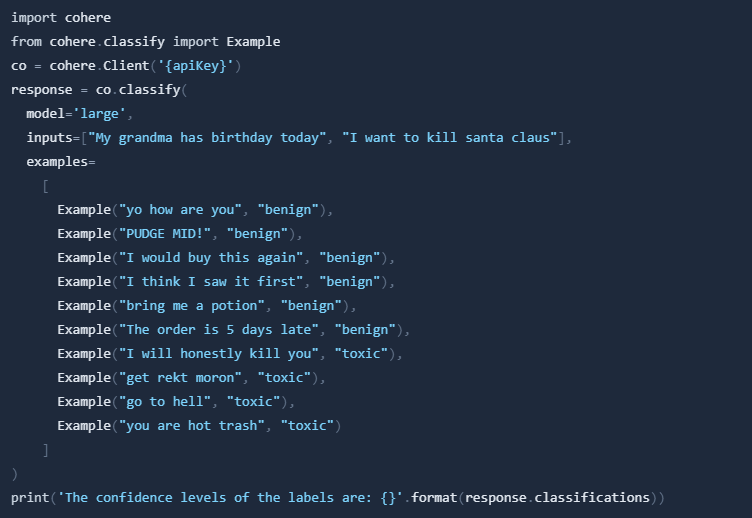

After exporting, the code looks like this:
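As a rough sketch of the shape such a snippet typically takes (not the exact Playground export), the call can be wrapped in a small function. It assumes the client object offers a `classify` method that accepts `inputs` and `examples`; `classify_texts` itself is a hypothetical helper, and the format expected for `examples` depends on the installed version of the Cohere client.

```python
def classify_texts(client, inputs, examples):
    """Send texts to the classify endpoint and return the raw response.

    `client` is expected to be a Cohere client object. `examples` must
    already be in the format your installed client version expects;
    this sketch passes them through unchanged.
    """
    if not inputs:
        raise ValueError("inputs must contain at least one text")
    return client.classify(inputs=list(inputs), examples=examples)


# Usage with the real client (needs network access and an API key):
#
#   import cohere
#   co = cohere.Client("<YOUR_API_KEY>")
#   response = classify_texts(co, ["some user comment"], examples)
```

Passing the client in as an argument keeps the API key out of the function and makes it easy to swap in a stand-in object while testing.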

Make sure you have the Cohere client package installed. If not, you can install it with Python's package manager, pip: `pip install cohere`

You can dynamically set the `inputs` field to the content submitted by your users. Note that you don't have to provide two or more inputs. You can also run it with only one input text.
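One way to handle both cases is to normalize whatever the user submitted into a list before classifying it. The helper below is a minimal sketch; `to_inputs` is a hypothetical name, and dropping blank entries is a design choice of this sketch rather than a requirement stated by Cohere.

```python
def to_inputs(content):
    """Turn user content into the list of texts passed as `inputs`.

    Accepts a single string or an iterable of strings; blank entries
    are dropped because there is nothing in them to classify.
    """
    if isinstance(content, str):
        content = [content]
    return [text.strip() for text in content if text and text.strip()]


print(to_inputs("just one comment"))
print(to_inputs(["first comment", "   ", "second comment"]))
```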

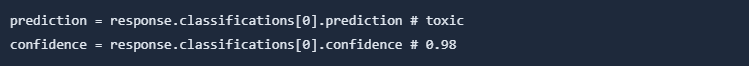

You can extract the prediction and the confidence from the response like this:
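The following sketch shows one way this could look. It assumes the response exposes a `classifications` list whose items have `input`, `prediction` and `confidence` attributes; these names may differ between versions of the Cohere client. The `is_allowed` policy, its `threshold` value and the stand-in response data are hypothetical and only there so the sketch runs without an API call.

```python
from types import SimpleNamespace


def extract_results(response):
    """Return (input, prediction, confidence) tuples from a classify response.

    Assumes each item in response.classifications exposes .input,
    .prediction and .confidence; adjust to your client version.
    """
    return [
        (item.input, item.prediction, item.confidence)
        for item in response.classifications
    ]


def is_allowed(prediction, confidence, threshold=0.8):
    """Hypothetical policy: block only confident 'toxic' predictions."""
    return not (prediction == "toxic" and confidence >= threshold)


# Stand-in response with made-up values, so no API call is needed here.
fake_response = SimpleNamespace(
    classifications=[
        SimpleNamespace(input="have a nice day", prediction="benign", confidence=0.97),
        SimpleNamespace(input="you are an idiot", prediction="toxic", confidence=0.93),
    ]
)

for text, prediction, confidence in extract_results(fake_response):
    allowed = is_allowed(prediction, confidence)
    print(f"{text!r}: {prediction} ({confidence:.2f}), allowed={allowed}")
```

Low-confidence toxic predictions pass through under this policy; an app could instead route them to manual review.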

In summary, in this tutorial you have learned how to use Cohere to moderate content in your app. You have also seen the Cohere Python client and how to use it to send text inputs to Cohere and get back a classification for each text. You can use this classification to decide whether the content is allowed in your app.