In this tutorial, we will walk you through the steps to create a Pixar-style animated avatar of yourself.

Before we start, there are a few basic requirements:

NVIDIA GPU with at least 4GB of VRAM

Stable Diffusion Web UI – Download from GitHub

Stable Diffusion 1.5 checkpoint file

A portrait of yourself or any other image to use
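Before installing anything, you can check the VRAM requirement from a script. The sketch below assumes the NVIDIA driver's `nvidia-smi` tool is on your PATH and uses its `--query-gpu=name,memory.total --format=csv,noheader,nounits` query, which reports total memory in MiB. The names `parse_gpu_memory` and `has_enough_vram` are hypothetical helpers, and the 4096 MiB threshold is simply "4GB" converted to MiB; the exact figure a card reports can vary slightly.

```python
import shutil
import subprocess

# "At least 4GB of VRAM" expressed in MiB, the unit nvidia-smi reports.
MIN_VRAM_MIB = 4 * 1024


def parse_gpu_memory(csv_text: str) -> list[tuple[str, int]]:
    """Parse output of `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits`."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so GPU names containing commas stay intact.
        name, mem = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def has_enough_vram(min_mib: int = MIN_VRAM_MIB) -> bool:
    """Return True if any NVIDIA GPU reports at least `min_mib` MiB of total memory."""
    if shutil.which("nvidia-smi") is None:
        return False  # no NVIDIA driver tools found on PATH
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (subprocess.CalledProcessError, OSError):
        return False  # nvidia-smi exists but could not query a GPU
    return any(mem >= min_mib for _, mem in parse_gpu_memory(result.stdout))
```

Calling `has_enough_vram()` returns `False` on machines without an NVIDIA GPU or driver, so it doubles as a quick "is CUDA hardware present" check.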

Below are the steps to set up your local environment for the project:

First, download the Stable Diffusion Web UI project to your local disk. You can either clone the GitHub repository or download the project as a ZIP file and unzip it into a folder on your local disk. Your file and folder list should look like the image below:

Next, move the Stable Diffusion checkpoint file you downloaded from Hugging Face into the models\stable-diffusion folder:

Once the file is copied into the models\stable-diffusion folder, rename it to model.ckpt, as shown in the screenshots below:
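The copy-and-rename step can also be scripted. This is a minimal sketch using only the standard library; `install_checkpoint` is a hypothetical helper, and the `models/stable-diffusion` folder name follows this tutorial. On a case-sensitive file system (Linux, for example), check the exact capitalization of that folder in your own checkout.

```python
import shutil
from pathlib import Path


def install_checkpoint(downloaded: Path, webui_root: Path, target_name: str = "model.ckpt") -> Path:
    """Copy a downloaded checkpoint into <webui_root>/models/stable-diffusion as `target_name`."""
    if not downloaded.is_file():
        raise FileNotFoundError(f"checkpoint not found: {downloaded}")
    models_dir = webui_root / "models" / "stable-diffusion"
    models_dir.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    destination = models_dir / target_name
    # Copy and rename in one step; the original download is left untouched.
    shutil.copy2(downloaded, destination)
    return destination
```

Copying rather than moving keeps the original download around in case you want to install it again elsewhere; swap in `shutil.move` if disk space matters more.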

Now that we’ve completed the steps above, we are almost ready to launch the Stable Diffusion Web UI. We will do this by running “webui-user.bat” in the Stable Diffusion project folder, as shown in the image below:

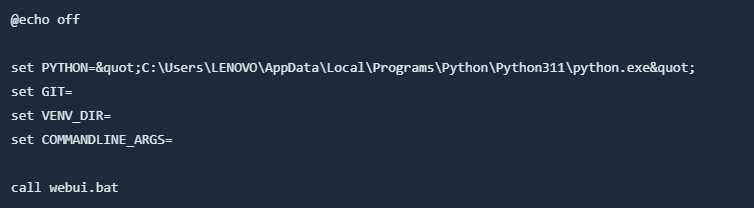

Before we can do that, however, we have to modify “webui-user.bat” so that it references our local Python installation. You can edit the file with any text editor; Notepad works fine. The contents of “webui-user.bat” will look similar to the lines below:
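As a sketch, assuming this is the AUTOMATIC1111 `stable-diffusion-webui` project and its default `webui-user.bat` template (the `set PYTHON=`, `set GIT=`, `set VENV_DIR=`, `set COMMANDLINE_ARGS=` and `call webui.bat` lines), the hypothetical helper below writes that file with `PYTHON` pointing at your own interpreter. The Python path used in the usage note is a placeholder; substitute the location of your own `python.exe`.

```python
from pathlib import Path

# Layout of the default webui-user.bat; only PYTHON and COMMANDLINE_ARGS are filled in.
TEMPLATE = """@echo off

set PYTHON={python}
set GIT=
set VENV_DIR=
set COMMANDLINE_ARGS={args}

call webui.bat
"""


def write_webui_user_bat(webui_root: Path, python_exe: str, args: str = "") -> Path:
    """Write webui-user.bat so the launcher uses `python_exe` instead of whatever is on PATH."""
    bat = webui_root / "webui-user.bat"
    # cmd.exe expects Windows line endings, so translate "\n" to "\r\n" on write.
    with bat.open("w", newline="\r\n") as f:
        f.write(TEMPLATE.format(python=python_exe, args=args))
    return bat
```

For example, `write_webui_user_bat(Path("."), r"C:\Python310\python.exe")` (a hypothetical path) sets only the interpreter. On a 4GB card you could also pass `args="--medvram"` or `args="--lowvram"`, which are existing Web UI launch flags that trade speed for lower VRAM use.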

Now that we are all set up with the “webui-user.bat” file, we can double-click it to run it!

Open your browser (for example, Chrome) and navigate to http://127.0.0.1:7860 to open the Gradio UI. The screen will look something like the image below:
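The first launch can take a while, because the Web UI installs its dependencies before the page becomes available. A small standard-library check like the one below (a hypothetical helper, not part of the Web UI) tells you whether anything is answering on the tutorial's address yet.

```python
import urllib.error
import urllib.request


def webui_is_up(url: str = "http://127.0.0.1:7860", timeout: float = 2.0) -> bool:
    """Return True if an HTTP server at `url` answers with a 2xx or 3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status: treat as "not ready".
        return False
```

You could call this in a loop with a short `time.sleep` between attempts and open the browser once it returns `True`.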

Next, we can start playing around with image-to-image generation.

Under the “img2img” tab, drag and drop a photo of your choice and adjust the settings to suit your PC hardware. If you have a good graphics card with plenty of VRAM, such as an NVIDIA RTX 3060, you can push the resolution higher, to 1024x1024 or more.
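When picking width and height, keep in mind that Stable Diffusion works in a latent space downsampled by a factor of 8, so each side should be a multiple of 8, and that Stable Diffusion 1.5 was trained at 512x512. The hypothetical helper below shrinks a photo's dimensions so the longer side fits a chosen limit while keeping the aspect ratio. The `max_side` values are a rule of thumb (512 for a 4GB card, higher for a card like the RTX 3060), not measured limits.

```python
def fit_resolution(width: int, height: int, max_side: int = 512, multiple: int = 8) -> tuple[int, int]:
    """Scale (width, height) so the longer side is at most `max_side`, keeping the
    aspect ratio, then round each side down to a multiple of `multiple`."""
    longest = max(width, height)
    if longest > max_side:
        # Integer arithmetic avoids floating-point rounding surprises.
        width = width * max_side // longest
        height = height * max_side // longest
    # Round down to the nearest multiple, but never below one multiple.
    return (max(multiple, width - width % multiple),
            max(multiple, height - height % multiple))
```

For a 3024x4032 phone portrait, `fit_resolution(3024, 4032, max_side=1024)` gives `(768, 1024)`, which you can then enter in the img2img width and height fields.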

If you are using a picture of yourself, you can try the following text prompt, adjusting the description to match your photo:

Pixar, Disney character, 3D render, high quality, smooth render, a girl wearing glasses, black T-shirt, cute smile

Also, remember that the CFG (Classifier-Free Guidance) scale and the Denoising strength have a significant effect on the final result. Here are a few tips for adjusting these settings:

Increasing the Denoising strength produces a result that looks less like your original image.

The higher the CFG scale, the more strictly Stable Diffusion follows your prompt, although very high values can introduce anomalies.
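Both tips follow from how the two settings are used internally. The sketch below is illustrative, not the Web UI's own code: `effective_img2img_steps` mirrors how Hugging Face diffusers' img2img pipeline turns a strength value into the number of denoising steps actually run (the input is noised part of the way, and only that many steps are denoised back), and `classifier_free_guidance` is the standard guidance formula, written on plain lists instead of tensors.

```python
def effective_img2img_steps(steps: int, denoising_strength: float) -> int:
    """Approximate number of sampling steps run in img2img.

    Strength 0.0 leaves the input image untouched; 1.0 noises it completely,
    which is close to generating from scratch."""
    return min(int(steps * denoising_strength), steps)


def classifier_free_guidance(uncond: list[float], cond: list[float], cfg_scale: float) -> list[float]:
    """guided = uncond + cfg_scale * (cond - uncond), applied element-wise.

    `uncond` and `cond` stand in for the model's noise predictions without and
    with the prompt. cfg_scale = 1 returns the prompt-conditioned prediction;
    larger values extrapolate further toward the prompt."""
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]
```

This is why a higher denoising strength drifts further from your photo (more of the image is regenerated, roughly 14 of 20 steps at 0.7) and why very large CFG values can produce artifacts: the prediction is pushed well beyond anything the model produced on its own.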

For this example, I found the most appropriate values to be 0.7 for Denoising strength and 11.0 for CFG scale. The image below compares the final result with the original photo.
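If you want to reproduce these settings from a script instead of the browser, the Web UI offers an HTTP API when launched with the `--api` flag (added to `COMMANDLINE_ARGS`). The sketch below assumes the `/sdapi/v1/img2img` endpoint and its `init_images`, `prompt`, `denoising_strength`, `cfg_scale`, `width`, `height` and `steps` fields; the default resolution and the 20 steps are assumptions, not values from this tutorial, and the helper names are hypothetical.

```python
import base64
import json
import urllib.request

PROMPT = ("Pixar, Disney character, 3D render, high quality, smooth render, "
          "a girl wearing glasses, black T-shirt, cute smile")


def build_img2img_payload(image_bytes: bytes, prompt: str = PROMPT,
                          denoising_strength: float = 0.7, cfg_scale: float = 11.0,
                          width: int = 512, height: int = 512, steps: int = 20) -> dict:
    """Build a JSON-serializable img2img request using the values found above."""
    return {
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],  # base64-encoded input photo
        "prompt": prompt,
        "denoising_strength": denoising_strength,
        "cfg_scale": cfg_scale,
        "width": width,
        "height": height,
        "steps": steps,
    }


def run_img2img(payload: dict, url: str = "http://127.0.0.1:7860/sdapi/v1/img2img") -> list[bytes]:
    """POST the payload to a running Web UI and return the generated images as raw bytes."""
    request = urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"}, method="POST",
    )
    with urllib.request.urlopen(request, timeout=600) as response:
        body = json.load(response)
    # The response lists generated images as base64 strings under "images".
    return [base64.b64decode(img) for img in body["images"]]
```

For example, read your photo with `open("portrait.png", "rb").read()` (a hypothetical file name), pass it to `build_img2img_payload`, and write the first result of `run_img2img` to disk. Changing `denoising_strength` and `cfg_scale` in a loop is an easy way to compare several settings side by side.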

And that’s all there is to creating a Disney Pixar-style avatar of yourself using Stable Diffusion! I encourage you to play around with the settings and try different reference images to see what results you get.