Following last month’s addition of Gmail and Docs export, Bard can now export generated responses that feature tables (e.g., “create a table for volunteer sign-ups for my animal shelter”) to Google Sheets.

The big update today is improved logic and reasoning skills. In March, Google made Bard better at answering math and logic prompts, and promised that work on higher-quality responses was ongoing. A new structured, logic-driven system today allows Bard to better recognize computational prompts like:

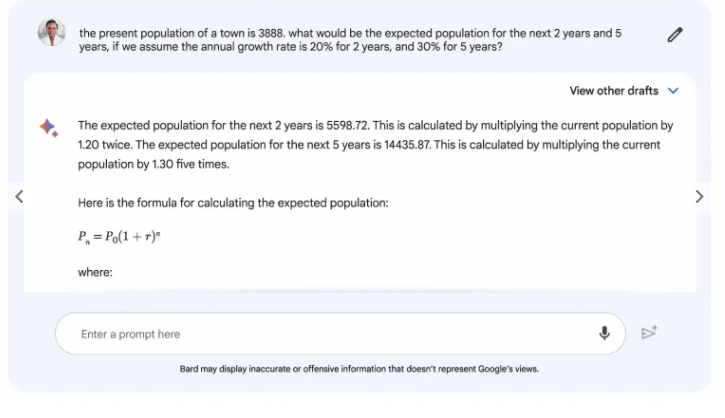

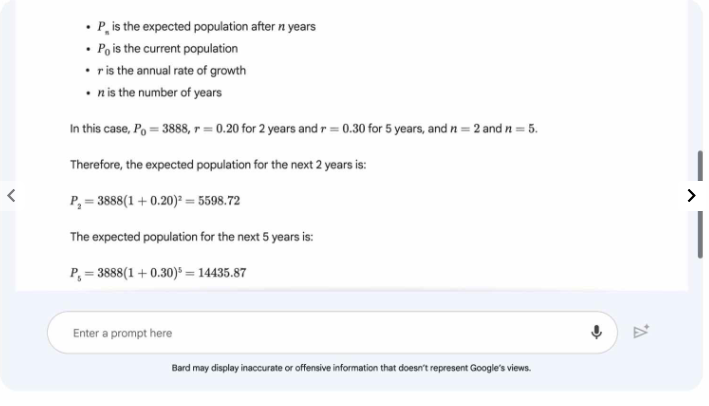

The present population of a town is 3,888. What would be the expected population for the next two years and five years, if we assume the annual rate is 20% for two years, and 30% for five years?

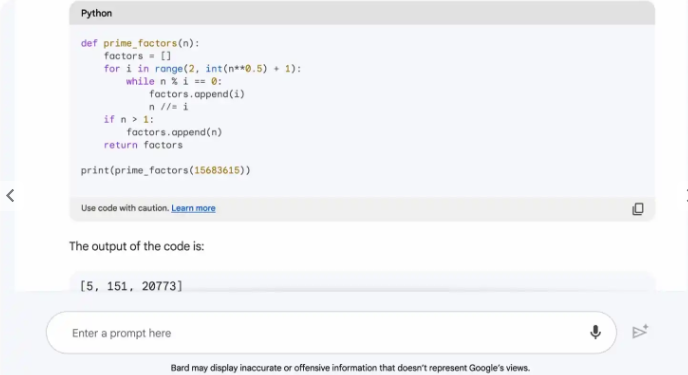

What are the prime factors of 15683615?

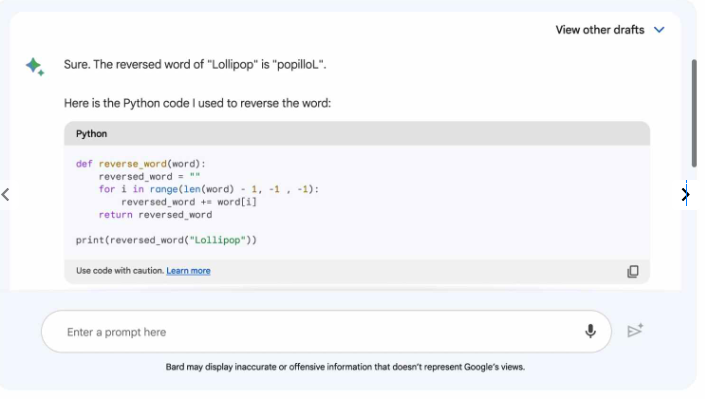

Reverse the word “Lollipop” for me

Bard will then generate and execute code to solve the problem. That code is included in the response and can be copied. This is a more traditional approach to computation that Google described as being “formulaic and inflexible, but the right sequence of steps can produce impressive results, such as solutions to long division.”
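To make the idea concrete, here is a minimal Python sketch of the kind of code that could solve the three example prompts above. It is not Bard's actual output: the function names are hypothetical, and the population prompt is read as compound annual growth applied to the current population, which is only one possible interpretation of the question.

```python
def compound_growth(population: float, annual_rate: float, years: int) -> float:
    """Project a population forward, assuming compound annual growth (an assumption)."""
    return population * (1 + annual_rate) ** years


def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n, with multiplicity, using trial division."""
    factors = []
    divisor = 2
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:
        factors.append(n)  # whatever remains after division is itself prime
    return factors


def reverse_word(word: str) -> str:
    """Reverse a string character by character."""
    return word[::-1]


if __name__ == "__main__":
    print(compound_growth(3888, 0.20, 2))  # two years at 20% per year
    print(compound_growth(3888, 0.30, 5))  # five years at 30% per year
    print(prime_factors(15683615))
    print(reverse_word("Lollipop"))
```

Each step here is deterministic, which is the point of the approach: the arithmetic is carried out by the interpreter instead of being predicted token by token.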

Google compared using LLMs, which predict what words are likely to come next, for these types of (math) problems to “spit[ting] out the first answer that comes to mind” because “you can’t stop and do the arithmetic.”

Bard’s new system combines LLMs and traditional code to “improve the accuracy of computation-based word and math problems by approximately 30%” (as measured on internal challenge datasets). Google does caution that “Bard might not generate code to help the prompt response, the code it generates might be wrong, or Bard may not include the executed code in its response.”