You can join the Codex waitlist here: https://openai.com/blog/openai-codex/. Acceptance usually takes a few days.

The idea is to generate a SQL query from natural language. For example, if we want to get all the users who are older than 25, we can write the following sentence:

Get all the users that are older than 25 years old

And the model will generate the following SQL query:

SELECT * FROM users WHERE age > 25

This lets us write SQL queries without knowing SQL syntax, which is very useful for non-technical people who want to query a database.

Make sure you have access to Codex. Before we write any code, you can experiment in the OpenAI playground: https://beta.openai.com/playground. I recommend doing this first, because it will help you understand how the model behaves.

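First, we need to install the OpenAI Python library with `pip install "openai<1.0"` (the `openai.Completion` interface used in this tutorial was removed in version 1.0 of the library).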

You can find the full documentation of this library here: https://github.com/openai/openai-python

Then, we need to import the library and set the API key:

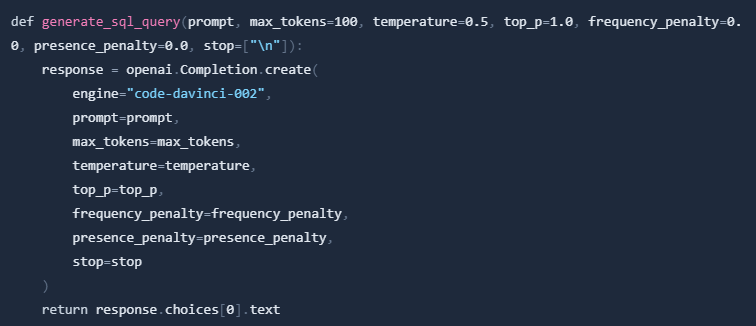
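In text form, the setup step might look like the sketch below. It assumes the legacy pre-1.0 `openai` package and that the key is stored in an `OPENAI_API_KEY` environment variable. Both the variable name and the helper name `configure_openai` are illustrative choices, not something the tutorial prescribes.

```python
# Install the library first (run this in a shell, not in Python):
#   pip install "openai<1.0"
import os


def configure_openai(api_key=None):
    """Import the openai package, set its API key, and return the module.

    Assumption: when no key is passed in, it is read from the
    OPENAI_API_KEY environment variable.
    """
    # Imported inside the function so this file still loads
    # when the package has not been installed yet.
    import openai

    openai.api_key = api_key or os.environ["OPENAI_API_KEY"]
    return openai
```

Reading the key from the environment keeps it out of the source file, which matters as soon as the code is shared or committed.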

Now we can start writing some code. We will start by creating a function that generates a SQL query from natural language. We will use the openai.Completion.create function and pass the following parameters:

engine: The engine that will be used to generate the SQL query. We will use davinci-codex for this tutorial.

prompt: The natural-language text from which the SQL query is generated.

max_tokens: The maximum number of tokens that will be generated.

temperature: The temperature of the model. The higher the temperature, the more random the text will be. Lower temperature results in more predictable text.

top_p: The cumulative probability cutoff for top-p (nucleus) sampling. 1.0 means no restriction. Lower values limit sampling to the most likely tokens, which makes completions less random.

frequency_penalty: A number between -2.0 and 2.0. Positive values penalize tokens in proportion to how often they have already appeared in the text, which reduces verbatim repetition.

presence_penalty: A number between -2.0 and 2.0. Positive values penalize tokens that have already appeared in the text at all, which encourages the model to introduce new content.

stop: One or more sequences at which the model stops generating further tokens.
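The parameters above could be wired together roughly as follows. This is a sketch, not the tutorial's original code: the prompt wording, the concrete values (`max_tokens=150`, `temperature=0`, the `stop` sequences) and the helper names `build_request` and `generate_sql` are all assumptions. It targets the legacy pre-1.0 `openai` package, which is where `openai.Completion.create` exists.

```python
def build_request(prompt, engine="davinci-codex"):
    """Return the keyword arguments passed to openai.Completion.create."""
    return {
        "engine": engine,
        "prompt": prompt,
        "max_tokens": 150,         # assumed upper bound for a single query
        "temperature": 0,          # most predictable output
        "top_p": 1.0,              # no nucleus-sampling restriction
        "frequency_penalty": 0.0,
        "presence_penalty": 0.0,
        "stop": ["#", ";"],        # assumed: stop at a new comment or the end of the statement
    }


def generate_sql(text):
    """Turn a plain-English request into a SQL query string."""
    import openai  # legacy pre-1.0 package; imported lazily

    # Assumed prompt format: the request as a comment, then "SELECT"
    # so that the model continues the query itself.
    prompt = f"### Translate this request into a SQL query\n# {text}\nSELECT"
    response = openai.Completion.create(**build_request(prompt))
    return ("SELECT" + response["choices"][0]["text"]).strip()
```

Ending the prompt with `SELECT` nudges the model to continue a query rather than write prose, which is why the function puts that keyword back in front of the returned text.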

Now, we can test our function. We will use the following prompt:

Get all the users that are older than 25 years old

The model will generate the following SQL query:

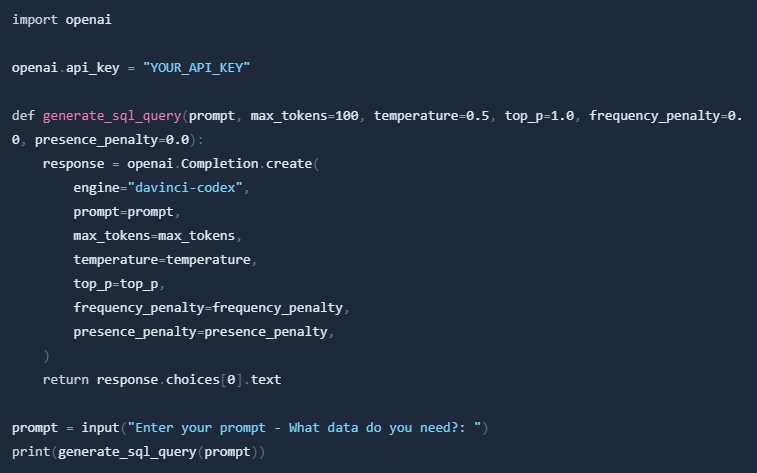

Now we put it all together in one file and add input via the console.
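A combined file could look something like the sketch below. It again assumes the legacy pre-1.0 `openai` package and an `OPENAI_API_KEY` environment variable. The prompt wording, the parameter values and the console message are illustrative choices.

```python
import os

ENGINE = "davinci-codex"


def generate_sql(text):
    """Ask Codex to turn a plain-English request into a SQL query."""
    import openai  # legacy pre-1.0 package; imported lazily

    response = openai.Completion.create(
        engine=ENGINE,
        # Assumed prompt format: request as a comment, then "SELECT".
        prompt=f"### Translate this request into a SQL query\n# {text}\nSELECT",
        max_tokens=150,
        temperature=0,
        top_p=1.0,
        frequency_penalty=0.0,
        presence_penalty=0.0,
        stop=["#", ";"],
    )
    return ("SELECT" + response["choices"][0]["text"]).strip()


def main():
    api_key = os.environ.get("OPENAI_API_KEY")
    if not api_key:
        # Exit early rather than failing inside the API call.
        print("Set the OPENAI_API_KEY environment variable first.")
        return

    import openai

    openai.api_key = api_key
    text = input("What do you want to query? ")
    print(generate_sql(text))


if __name__ == "__main__":
    main()
```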

Now run the file, enter your prompt, and get back the SQL query.

In this tutorial, we learned how to generate a SQL query from natural language using OpenAI Codex and how to use the OpenAI Python library. You can continue improving this project by adding a database and a web interface. You can also add more instructions or examples to the prompt to guide the model in the right direction and make it more accurate. You could also include your database schema in the prompt, so that the model uses your actual table and column names. For example, like this:
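One way to put the schema into the prompt is sketched below. The `users` and `orders` tables, the comment-style layout and the helper name `build_prompt` are hypothetical and only illustrate the idea. The resulting string would be passed as the `prompt` parameter.

```python
def build_prompt(question, schema):
    """Build a Codex prompt that lists the tables before the request.

    `schema` maps each table name to a list of its column names.
    """
    lines = ["### SQL tables, with their columns:", "#"]
    for table, columns in schema.items():
        lines.append(f"# {table}({', '.join(columns)})")
    lines.append("#")
    lines.append(f"### {question}")
    lines.append("SELECT")  # the model continues the query from here
    return "\n".join(lines)


# Hypothetical example schema
example_schema = {
    "users": ["id", "name", "age"],
    "orders": ["id", "user_id", "total"],
}
print(build_prompt("Get all the users that are older than 25 years old", example_schema))
```

Listing the tables as comments gives the model concrete names to work with, so it has less reason to guess at tables or columns that do not exist.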