The GitHub user AUTOMATIC1111 has created a Stable Diffusion Web Interface that lets you run the model locally to test and validate ideas. It is built on Gradio, a Python library for building UI components. Its features include:

Original txt2img and img2img modes

One-click install and run script (you still need to install Python and Git yourself)

Outpainting

Inpainting

Color Sketch

Prompt Matrix

Stable Diffusion Upscale

Attention: specify parts of the text that the model should pay more attention to

Extras tab with:

GFPGAN, a neural network that fixes faces

CodeFormer, a face restoration tool and an alternative to GFPGAN

RealESRGAN, a neural network upscaler

ESRGAN, a neural network upscaler with many third-party models

SwinIR and Swin2SR, neural network upscalers

LDSR, Latent Diffusion Super Resolution upscaling

Resizing aspect ratio options

Random artist button

Styles, a way to save part of a prompt and easily apply it later via a dropdown

Variations, a way to generate the same image with tiny differences

and much more.
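To make the Prompt Matrix feature more concrete, here is a minimal Python sketch of the combination logic. It assumes the syntax described in the web UI's documentation: prompt parts are separated by `|`, the first part is always kept, and every combination of the remaining parts becomes its own prompt. The `prompt_matrix` function is a hypothetical helper for illustration, not code from the repository.

```python
from typing import List


def prompt_matrix(prompt: str) -> List[str]:
    """Expand a 'base|opt1|opt2|...' prompt into every combination.

    The first segment is always kept; each later segment is either
    included or left out, giving 2**n prompts for n optional segments.
    """
    base, *optional = [part.strip() for part in prompt.split("|")]
    prompts = []
    for mask in range(2 ** len(optional)):
        # Bit j of the mask decides whether optional segment j is included
        chosen = [opt for j, opt in enumerate(optional) if (mask >> j) & 1]
        prompts.append(", ".join([base] + chosen))
    return prompts


if __name__ == "__main__":
    for p in prompt_matrix("a busy city street in a modern city|illustration|cinematic lighting"):
        print(p)
```

With two optional parts you get 2² = 4 prompts; each additional part doubles the number of images, so the matrix grows quickly.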
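The Attention feature works through bracket syntax inside the prompt. As the web UI's documentation describes it, `(text)` multiplies the attention on `text` by 1.1, `[text]` divides it by 1.1, brackets can be nested, and `(text:1.5)` sets an explicit weight. The sketch below turns a prompt into (text, weight) pairs under those assumptions. `parse_attention` is a hypothetical name, and the sketch is simplified: it ignores escaped brackets and other edge cases.

```python
import re
from typing import List, Tuple

ROUND_FACTOR = 1.1          # (text) multiplies attention by 1.1
SQUARE_FACTOR = 1 / 1.1     # [text] divides attention by 1.1

# Tokens: brackets, an explicit ":weight)" closer, plain text, or a stray colon
_TOKEN = re.compile(
    r"\(|\[|:\s*([+-]?(?:\d+(?:\.\d*)?|\.\d+))\s*\)|\)|\]|[^()\[\]:]+|:"
)


def parse_attention(prompt: str) -> List[Tuple[str, float]]:
    """Split a prompt into (text, weight) pairs (simplified; no escapes)."""
    segments: List[List] = []     # mutable [text, weight] pairs
    round_open: List[int] = []    # segment index where each "(" began
    square_open: List[int] = []   # segment index where each "[" began

    def scale_from(start: int, factor: float) -> None:
        # Apply a bracket's factor to every segment emitted since it opened
        for seg in segments[start:]:
            seg[1] *= factor

    for match in _TOKEN.finditer(prompt):
        token, explicit = match.group(0), match.group(1)
        if token == "(":
            round_open.append(len(segments))
        elif token == "[":
            square_open.append(len(segments))
        elif explicit is not None and round_open:
            scale_from(round_open.pop(), float(explicit))
        elif token == ")" and round_open:
            scale_from(round_open.pop(), ROUND_FACTOR)
        elif token == "]" and square_open:
            scale_from(square_open.pop(), SQUARE_FACTOR)
        else:
            segments.append([token, 1.0])

    # Brackets left unclosed still apply their default factor
    for start in round_open:
        scale_from(start, ROUND_FACTOR)
    for start in square_open:
        scale_from(start, SQUARE_FACTOR)

    # Merge neighbouring segments that ended up with the same weight
    merged: List[Tuple[str, float]] = []
    for text, weight in segments:
        if merged and merged[-1][1] == weight:
            merged[-1] = (merged[-1][0] + text, weight)
        else:
            merged.append((text, weight))
    return merged


if __name__ == "__main__":
    print(parse_attention("a man in a ((tuxedo)), [blurry] background"))
```

Nested brackets multiply, so `((tuxedo))` ends up at roughly 1.21 while `[blurry]` drops to about 0.91.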
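Styles can be pictured as named prompt fragments merged into whatever you type. The sketch below assumes this merging rule: if a style's text contains the placeholder `{prompt}`, your prompt is substituted there; otherwise the style is appended after your prompt, separated by a comma. The `Style` class and the `merge_prompts` and `apply_style` functions are hypothetical names for illustration; the web UI itself saves styles to disk and applies them from the dropdown.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Style:
    """A saved, named prompt fragment (hypothetical structure)."""
    name: str
    prompt: str
    negative_prompt: str = ""


def merge_prompts(style_text: str, prompt: str) -> str:
    # A {prompt} placeholder marks where the user's prompt goes
    if "{prompt}" in style_text:
        return style_text.replace("{prompt}", prompt)
    # Otherwise append the style after the prompt, skipping empty parts
    parts = [part for part in (prompt.strip(), style_text.strip()) if part]
    return ", ".join(parts)


def apply_style(style: Style, prompt: str, negative_prompt: str = "") -> Tuple[str, str]:
    """Return the (prompt, negative prompt) pair after applying a style."""
    return (
        merge_prompts(style.prompt, prompt),
        merge_prompts(style.negative_prompt, negative_prompt),
    )


if __name__ == "__main__":
    cinematic = Style("cinematic", "cinematic lighting, film grain", "blurry")
    framed = Style("portrait", "portrait of {prompt}, oil painting")
    print(apply_style(cinematic, "a red fox in the snow"))
    print(apply_style(framed, "a red fox"))
```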
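Variations can be understood in terms of the random noise each image starts from: a seed fixes the initial noise, and blending it with noise from a second "variation" seed yields a similar but slightly different image. The sketch below assumes the blend is a spherical linear interpolation (slerp), a common choice for Gaussian noise because, unlike a straight linear blend, it does not shrink the noise's overall magnitude midway. It uses Python's `random` module and plain lists instead of the tensors the web UI works with, and `gaussian_noise`, `slerp`, and `variation_noise` are hypothetical names.

```python
import math
import random
from typing import List


def gaussian_noise(seed: int, size: int) -> List[float]:
    """Deterministic standard-normal noise for a given seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def slerp(t: float, a: List[float], b: List[float]) -> List[float]:
    """Spherical linear interpolation from a (t=0) to b (t=1)."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))  # angle between the vectors
    sin_omega = math.sin(omega)
    if sin_omega < 1e-6:
        # Nearly parallel vectors: fall back to linear interpolation
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    wa = math.sin((1 - t) * omega) / sin_omega
    wb = math.sin(t * omega) / sin_omega
    return [wa * x + wb * y for x, y in zip(a, b)]


def variation_noise(seed: int, variation_seed: int, strength: float, size: int = 16) -> List[float]:
    """Blend the base seed's noise toward the variation seed's noise."""
    return slerp(strength, gaussian_noise(seed, size), gaussian_noise(variation_seed, size))


if __name__ == "__main__":
    base = variation_noise(42, 7, 0.0)
    nudged = variation_noise(42, 7, 0.1)
    # A small strength keeps the noise close to the base seed's noise
    print(max(abs(x - y) for x, y in zip(base, nudged)))
```

A strength of 0 reproduces the original image's noise exactly, and a strength of 1 switches entirely to the variation seed; small values in between give the "tiny differences" described above.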

How to use the Web Interface

Windows

1. Install Python 3.10.6, making sure to check "Add Python to PATH" during installation.

2. Install Git.

3. Download the stable-diffusion-webui repository, for example by running `git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git`.

4. Place model.ckpt in the models directory (see dependencies for where to get it).

5. (Optional) Place GFPGANv1.4.pth in the base directory, alongside webui.py (see dependencies for where to get it).

6. Run webui-user.bat from Windows Explorer as a normal (non-administrator) user.

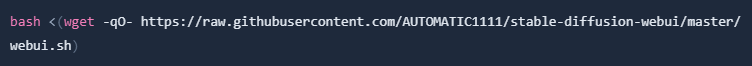
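Before running webui-user.bat, it can help to confirm that the prerequisites from the steps above are in place. The following Python sketch checks for Python 3.10, `git` on the PATH, `webui.py` in the cloned folder, and a `.ckpt` checkpoint somewhere under `models/` (it searches subfolders too, since the exact location may differ between versions of the repository). The `check_prerequisites` function, its messages, and the `check_setup.py` filename in the usage comment are hypothetical.

```python
import shutil
import sys
from pathlib import Path
from typing import List


def check_prerequisites(webui_dir: Path) -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems: List[str] = []

    # Step 1: the guide recommends Python 3.10.6
    if sys.version_info[:2] != (3, 10):
        problems.append(f"expected Python 3.10.x, found {sys.version.split()[0]}")

    # Step 2: git must be callable from the command line
    if shutil.which("git") is None:
        problems.append("git was not found on PATH")

    # Step 3: the cloned repository should contain webui.py
    if not (webui_dir / "webui.py").is_file():
        problems.append(f"webui.py not found in {webui_dir}")

    # Step 4: look for a checkpoint anywhere under models/
    models_dir = webui_dir / "models"
    if not (models_dir.is_dir() and any(models_dir.rglob("*.ckpt"))):
        problems.append(f"no .ckpt checkpoint found under {models_dir}")

    return problems


if __name__ == "__main__":
    # Usage (hypothetical script name): python check_setup.py C:\path\to\stable-diffusion-webui
    target = Path(sys.argv[1]) if len(sys.argv) > 1 else Path.cwd()
    issues = check_prerequisites(target)
    for issue in issues:
        print("Problem:", issue)
    if not issues:
        print("All checks passed.")
```

The optional GFPGANv1.4.pth file from step 5 is deliberately not checked, since the web UI can run without it.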

Linux

1. Install the dependencies:

2. To install in /home/$(whoami)/stable-diffusion-webui/, run: