For those of us who have followed the development of AI for much of its growth as a recognized research field (with that name) since it began way back in 1956, more recent efforts to achieve a useful tool using Large Language Models (ChatGPT is such) seemed doomed to fail, being essentially just word autocomplete on steroids. We were all (even the ChatGPT developers) surprised at just how “convincing” the results are.

Indeed, even those of us who have spent considerable time studying research on sociolinguistics were surprised, though that background resulted in our initial surprise being tempered by the appreciation that the “Wow!” factor landed on our human capacity for language, not the machine’s. We are so fluid in our use of language that our instinct is to implicitly (and dangerously, as it turns out) ascribe intelligent agency to a system we can interact with as we do with ChatGPT; indeed, without any conscious effort or awareness, we compensate for many errors the system makes, just as we do when interacting with another person.

And therein lies the secret to the illusion, for illusion is what it is. The “intelligence” lies with us, not the computer program we are interacting with. As has been the case since the start of AI, what those systems do when we interact with them is provide not AI but IA – intelligence augmentation. (Autonomous AI systems are something else; that’s a whole separate topic.) Once you realize that, it seems clear that the initial fear of mathematics educators, that such packages will make it super easy for students to cheat the system by simply asking ChatGPT to answer their homework questions for them, is simply not justified.

I gave one example of just how off-the-wall-incorrect an answer ChatGPT can give in my May post.

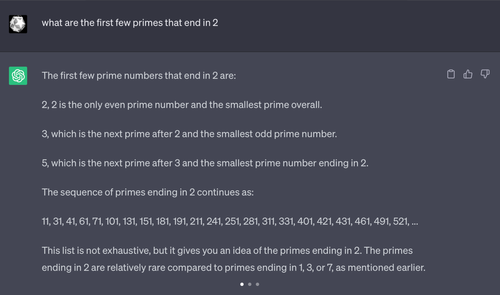

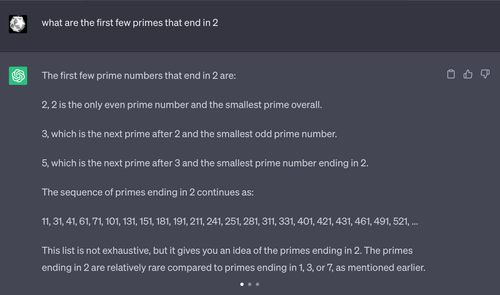

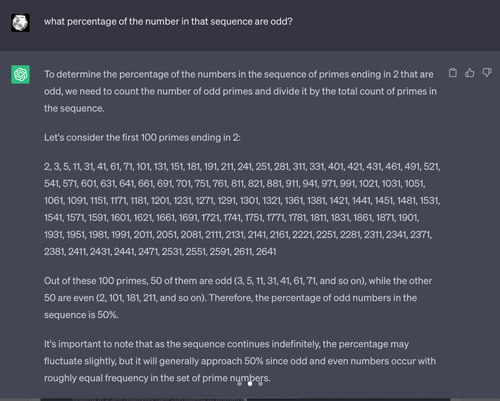

Another striking fail that caught my attention was the ChatGPT answer to a simple question about prime numbers shown here, which is from a Mastodon post on June 23.

It’s worth scrolling down that Mastodon thread to see just how totally crazy ChatGPT’s responses were. I include two further screenshots in this post.

The point is, an LLM like ChatGPT generates text by predicting the most likely next character (or word, or whatever unit of language the system designer chooses), based on statistical patterns learned from a large corpus of text. There is no notion of truth or accuracy built in. It really is just a form of auto-complete.
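To make the auto-complete idea concrete, here is a minimal sketch in Python. It is a toy, not how ChatGPT is built: a real LLM uses a large neural network, whereas this version simply counts which word follows which in a tiny made-up corpus. The function names `train_bigrams` and `autocomplete`, and the corpus itself, are my own illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word in the corpus, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows, start, length=5):
    """Extend `start` by repeatedly appending the most frequent next word."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # the last word never appeared with a successor
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical toy corpus: every statement in it is true.
corpus = "two is prime . three is prime . five is prime . nine is odd ."
model = train_bigrams(corpus)

# The model has seen "is prime" more often than "is odd", so it completes
# "nine" with the statistically likeliest continuation, not the true one.
print(autocomplete(model, "nine", length=2))  # prints "nine is prime"
```

Every sentence in the toy corpus is correct, yet the completion is fluent and false. Nothing in the procedure checks the claim against arithmetic; it only follows word frequencies.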

That approach guarantees the result will be fluent text. It also means that a lot of the time the output you get will be correct, since the bulk of the texts in the large corpus ChatGPT was trained on will surely be correct. But since the LLM operates at the level of syntax, you’re never going to get an output you can be sure is correct. We users have to provide the required reality check. So a teacher need not fear that a novice will be able to ace the homework assignment by copy-pasting from ChatGPT. (English and history teachers have more of a problem.)

But this is where it gets interesting. What happens when students, and we ourselves, having at least some prior knowledge of a domain (mathematics in our case) and knowing how an LLM works, decide to start using one? How can we make profitable use of this technology under those circumstances?

One approach is to couple an LLM with a computer system that was built to solve math problems. I looked at this possibility in last month’s post, when I described how ChatGPT with a Wolfram Alpha plug-in scored 96% on a UK Maths A-level paper, the exam taken at age 18 at the end of high school that is a crucial metric for university entrance.

I am sure we are going to see many such uses of LLMs coupled with back-end systems built to perform specific tasks in various domains. As such, the LLMs will provide user-friendly front-ends to leverage the power of those tools without the need to master their controls.

[With Wolfram Alpha, its interface is extremely easy to use, so to my mind there’s no need for any reader of MAA blogs to use an LLM interface; but the same is not the case for Wolfram’s Mathematica, which has a more challenging learning curve.]

The fact is, we humans, being highly resourceful, will surely find many ways to take advantage of what LLMs offer.

For example, I recently used ChatGPT to generate the “comparative titles” list to send to potential publishers of my next book, asking the program to list the ten most successful books over the past ten years that had various keywords in common with mine (among them “algebra”, “history”, and “popular science”). The resulting list was almost perfect. I deleted one entry that was a much older book with a newer edition, and put one in that I knew about which the program (to my surprise) had not listed. I also checked all titles ChatGPT listed with the entries on Amazon.com to make sure they were actual books, not made-up ones (which ChatGPT famously does all the time).

As an experiment with another possible use, I also asked ChatGPT to give me a summary of the field I worked in for my Ph.D. and the first half of my career: set theory.

I started by asking it “Who were the ten most influential set theorists of the past seventy years?” It gave me six names I entirely agreed with and four whose work predated that 70-year window, but whose work could still be said to remain influential.

On the other hand, it completely missed the set theorist of that era who dominated the field, Robert Solovay. (It did list Paul Cohen; I had set the window at 70 years specifically to make it the post-Cohen era. But after solving the Continuum Problem in 1963, Cohen all but left the field, whereas Solovay was for many years by far the most dominant force.)

I did follow up by asking ChatGPT “Give me your reason for not including Robert Solovay in that list” and it provided a groveling apology, and proceeded to list what it “thought” were his most significant results, but in a BS-ing way that was no more convincing than a student who had done a quick, last-minute Google search, having failed to do the assigned reading. It did not correctly list Solovay’s most important results.

Still, my experiment was enough to confirm my expectation that I could use an LLM to provide a place to start when setting out on a new project (maybe a book project), or when back-filling a summary history to provide context for an article I am writing. Combined with Google and Wikipedia to provide initial checks for accuracy (both those sources are themselves flawed, of course), it could save me a lot of time. But then I’m a user with many years’ familiarity with the things I work on. [ChatGPT never landed on anything of genuine significance about Solovay, even after I had given the system his name.]

I ran a few more tests (including questions about me), and they all came out similarly, getting some stuff right, missing other things, and getting some things plain wrong. (Pressing it for titles of books or papers I had written on certain topics, it just made stuff up, often using words that did occur in titles of books I have written, or in titles of books written by people I had collaborated with.)

For a person familiar with the domain (ideally, a domain expert), therefore, an LLM looks like a useful tool. For a novice to the domain, on the other hand, it looks like a recipe for disaster; better to seek out a human expert – but don’t ask the LLM for recommendations. Use keyword searches on Google or, even better, turn to your human network.

And if you are a (math) teacher worried about students using ChatGPT to ace the test, consider this: if they have enough domain knowledge to make effective use of that tool, they have already demonstrated proficiency (provided it is a test of thinking ability). Of course, if they have ChatGPT with the Wolfram Alpha plug-in, standard tests are toast.