Our goal is to make a video by interpolation. We will use the Stable Diffusion model to generate images and then turn them into a video. Fortunately, we don't have to write the code for interpolating between points in latent space ourselves: we will use the stable_diffusion_videos library. If you want to know exactly how it works under the hood, feel free to explore the code on GitHub. If you need help, ask a question on the channel dedicated to this guide. You will find it on our Discord!
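For intuition, interpolation between two latent vectors is commonly done with spherical linear interpolation (slerp). The sketch below shows the idea on tiny vectors in plain Python. Whether stable_diffusion_videos uses exactly this formula is an assumption, and the function name `slerp`, the threshold, and the toy vectors are made up for illustration.

```python
import math

def slerp(t, v0, v1, dot_threshold=0.9995):
    """Spherically interpolate between two equal-length vectors (lists of floats)."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the two vectors.
    dot = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    # Nearly parallel vectors: fall back to plain linear interpolation.
    if abs(dot) > dot_threshold:
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    theta = math.acos(dot)
    s0 = math.sin((1 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]

# Toy "latents": five evenly spaced points from start to end.
start = [1.0, 0.0]
end = [0.0, 1.0]
frames = [slerp(i / 4, start, end) for i in range(5)]
```

Each element of `frames` plays the role of one latent that would be decoded into one video frame.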

To run this tutorial, we will use Google Colab and Google Drive.

First of all, you need to install all dependencies and connect your Google Drive to Colab, so that the video and its frames can be saved there. You can do it by running:
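A minimal sketch of the install step, assuming the library is installed from PyPI under the name `stable_diffusion_videos`. In a Colab cell the usual shortcut is `!pip install stable_diffusion_videos`; the plain-Python equivalent below only runs the install when it detects a Colab kernel. The helper name `pip_install_command` is hypothetical.

```python
import subprocess
import sys

# Assumption: the library is installed from PyPI under this name.
PACKAGE = "stable_diffusion_videos"

# Colab kernels have the google.colab module loaded; elsewhere nothing is installed.
IN_COLAB = "google.colab" in sys.modules

def pip_install_command(package):
    """Build the pip command for the interpreter that runs the notebook."""
    return [sys.executable, "-m", "pip", "install", package]

if IN_COLAB:
    subprocess.check_call(pip_install_command(PACKAGE))
```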

and then:
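Mounting Google Drive in Colab is typically done with `google.colab.drive.mount`. The sketch below assumes the conventional mount point `/content/drive` and does nothing outside Colab; the wrapper name `mount_drive` is hypothetical.

```python
import sys

# Assumption: the conventional Colab mount point for Google Drive.
MOUNT_POINT = "/content/drive"

def mount_drive(mount_point=MOUNT_POINT):
    """Mount Google Drive when running in Colab; return True if it was mounted."""
    if "google.colab" not in sys.modules:
        return False  # not running in Colab, nothing to mount
    from google.colab import drive
    drive.mount(mount_point)  # opens an authorization prompt
    return True

mounted = mount_drive()
```

After a successful mount, files written under the mount point appear in your Drive.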

The next step is authentication with Hugging Face. You can find your token in the Access Tokens section of your Hugging Face account settings.
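One way to authenticate from a notebook is through the `huggingface_hub` package, which provides `notebook_login()` for interactive use and `login(token=...)` for a token you already hold. The sketch below assumes that package is installed; the helper names `read_token` and `authenticate`, and reading the token from an environment variable, are choices made for this example.

```python
import os

def read_token(env_var="HF_TOKEN"):
    """Return the token from the environment, or None if it is unset or blank."""
    token = os.environ.get(env_var, "").strip()
    return token or None

def authenticate(token=None):
    """Log in to the Hugging Face Hub, interactively if no token is given."""
    # Imported lazily so the helpers above work without the package installed.
    from huggingface_hub import login, notebook_login
    if token:
        login(token=token)
    else:
        notebook_login()  # shows a token input widget in the notebook
```

In a notebook cell you would call `authenticate(read_token())` and paste the token when prompted.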

To generate a video, we need to define the prompts between which the model will interpolate. We will use a dictionary for this:

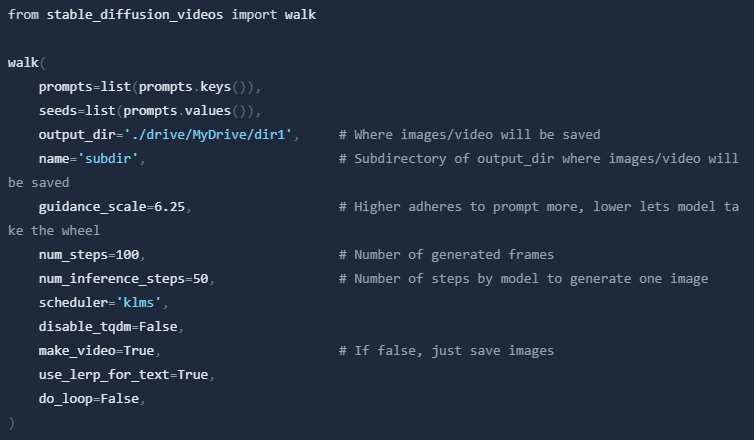
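A prompt dictionary could look like the sketch below. The key names, prompt texts, and seed values are illustrative assumptions, not the ones used for the results in this guide. The one firm constraint is that there should be one seed per prompt, since each seed fixes the starting noise for its prompt.

```python
# Illustrative prompts and seeds; replace them with your own.
prompts = {
    "prompts": [
        "a photo of a forest in summer",
        "a photo of a forest in winter",
    ],
    "seeds": [42, 1337],
}

# One seed per prompt, and at least two prompts to interpolate between.
assert len(prompts["prompts"]) == len(prompts["seeds"])
assert len(prompts["prompts"]) >= 2
```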

Now we can generate the images and the video using:
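A hedged sketch of the generation call, assuming a release of stable_diffusion_videos that exposes `StableDiffusionWalkPipeline` as shown in the library's README; other releases may expose a different entry point or parameter names. The wrapper `generate_video`, the model checkpoint, the output directory under Drive, and the video name are assumptions for this example. A GPU runtime is required.

```python
def generate_video(prompts, seeds, num_interpolation_steps=100,
                   output_dir="/content/drive/MyDrive/dreams", name="my_video"):
    """Interpolate between the prompts and return the path of the saved video."""
    # Validate cheaply before loading the model.
    if len(prompts) != len(seeds):
        raise ValueError("provide exactly one seed per prompt")
    if len(prompts) < 2:
        raise ValueError("at least two prompts are needed to interpolate")

    # Heavy imports are kept inside the function so validation works without them.
    import torch
    from stable_diffusion_videos import StableDiffusionWalkPipeline

    pipeline = StableDiffusionWalkPipeline.from_pretrained(
        "CompVis/stable-diffusion-v1-4",  # assumed checkpoint
        torch_dtype=torch.float16,
    ).to("cuda")

    return pipeline.walk(
        prompts=prompts,
        seeds=seeds,
        num_interpolation_steps=num_interpolation_steps,  # frames per prompt pair
        height=512,
        width=512,
        output_dir=output_dir,   # assumed folder on the mounted Drive
        name=name,               # assumed subfolder / video name
        guidance_scale=8.5,
        num_inference_steps=50,  # denoising steps per frame
    )
```

With a prompt dictionary, the call would be `generate_video(prompts["prompts"], prompts["seeds"])`.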

This process may take a long time, depending on the parameters you pass.
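To see why the parameters matter, a rough workload estimate helps. The sketch below assumes one image is generated per interpolation step for each consecutive pair of prompts, and that every image needs `num_inference_steps` denoising steps. It ignores model loading and video encoding, the frame rate of 30 is an assumed value, and the function name `estimate_workload` is hypothetical.

```python
def estimate_workload(num_prompts, num_interpolation_steps,
                      num_inference_steps, fps=30):
    """Rough count of frames and denoising steps for one interpolation run."""
    transitions = max(num_prompts - 1, 0)  # consecutive prompt pairs
    frames = transitions * num_interpolation_steps
    return {
        "frames": frames,
        "denoising_steps": frames * num_inference_steps,
        "video_seconds": frames / fps,
    }

# Two prompts, 100 interpolation steps, 50 inference steps per frame.
estimate = estimate_workload(2, 100, 50)
```

Under these assumptions, doubling either the interpolation steps or the inference steps roughly doubles the generation time.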

There are some descriptions of the parameters, but if you want to know more, check the stable_diffusion_videos code! I use 100 steps between prompts, but you can use more for smoother transitions. You can also modify num_inference_steps and other parameters. Feel free to experiment! After running this code, you will find the video in your Google Drive. You can download it, watch it, and share it with your friends!
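If you are unsure where the video ended up, a small helper can list the video files under the output folder. This sketch assumes the video is saved as an `.mp4` file somewhere below the output directory; the function name `find_videos` and the example path are hypothetical.

```python
from pathlib import Path

def find_videos(output_dir):
    """Return all .mp4 files below output_dir, newest first."""
    root = Path(output_dir)
    if not root.is_dir():
        return []
    return sorted(root.rglob("*.mp4"),
                  key=lambda p: p.stat().st_mtime,
                  reverse=True)

# Example path on a mounted Drive (an assumption, adjust to your own folder).
videos = find_videos("/content/drive/MyDrive/dreams")
```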

If you want to reproduce my results, just copy and paste the code below, but I recommend using your own prompts and experimenting with the model. It's worth it!

You can use more than two prompts. Example:
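A sketch with three prompts, again with illustrative texts and seeds rather than the ones used in this guide. The interpolation runs between consecutive prompts, so three prompts give two transitions.

```python
# Illustrative prompts and seeds; one seed per prompt.
prompts = {
    "prompts": [
        "a mountain lake at sunrise",
        "a mountain lake at noon",
        "a mountain lake at night",
    ],
    "seeds": [11, 22, 33],
}

# Consecutive pairs that the model would interpolate between.
transitions = list(zip(prompts["prompts"], prompts["prompts"][1:]))
for start, end in transitions:
    print(f"{start} -> {end}")
```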