GPT-3 is a large language model from OpenAI that generates text from a prompt. It was trained on a large corpus of text, much of it collected from the web.

If you don't have one already, go to OpenAI, create an account and generate an API key. Never commit your API key to a public repository!
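One common way to keep the key out of the repository is to read it from an environment variable instead of writing it into the source. The sketch below assumes the variable is named `OPENAI_API_KEY` (the name the `openai` package also looks for by default); the helper `load_api_key` is hypothetical.

```python
import os
from typing import Mapping, Optional


def load_api_key(env: Optional[Mapping[str, str]] = None,
                 name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment instead of hard-coding it.

    `env` defaults to os.environ; passing a plain dict makes it easy to test.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        # Fail early with a clear message rather than sending an empty key.
        raise RuntimeError(f"Set the {name} environment variable first.")
    return key
```

In `gpt3.py` this could replace the literal key, e.g. `openai.api_key = load_api_key()`, and the container can receive the value at start-up with `docker run -e OPENAI_API_KEY ...`, which copies the variable from your shell.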

Next, we add the `openai` package to our project's dependencies:

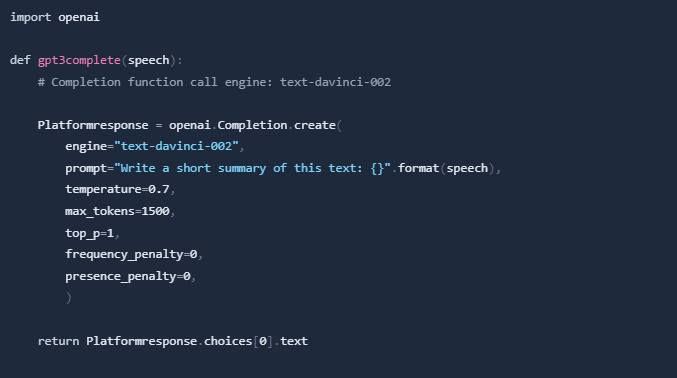

Create a new file called gpt3.py and add the following code to it. For the prompt I used the summarization option to summarize the text, but you can use any prompt you want and tweak the parameters as well.

At the top of the file, update the imports. Replace "MY_API_KEY" with the API key you created earlier.

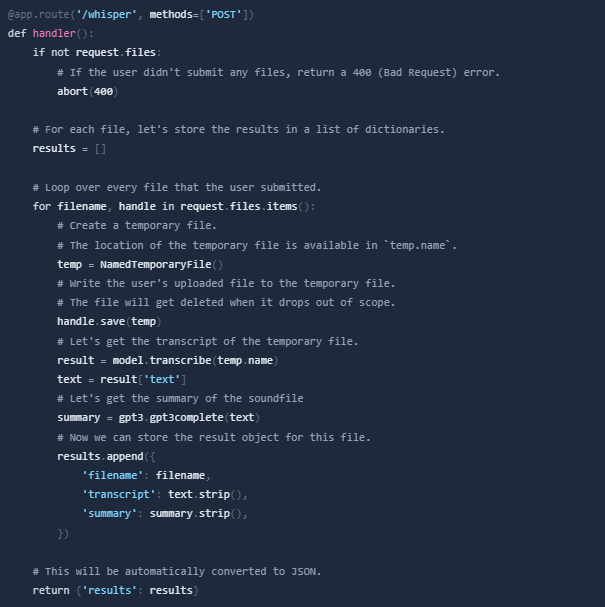
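The summarization step can be sketched without depending on a particular `openai` version by building the request separately and injecting the function that sends it. This is a minimal sketch, not the code from the screenshot: the prompt wording, the default parameter values, the model name `text-davinci-003` and the helper names are all assumptions.

```python
from typing import Any, Callable, Dict

# Assumption: a GPT-3 completion model. Older GPT-3 models may no longer
# be available, so replace this with a model your account can use.
DEFAULT_MODEL = "text-davinci-003"


def build_summary_request(text: str, model: str = DEFAULT_MODEL,
                          max_tokens: int = 256,
                          temperature: float = 0.7) -> Dict[str, Any]:
    """Return keyword arguments for a completion request that summarizes text."""
    text = text.strip()
    if not text:
        raise ValueError("Nothing to summarize: the transcript is empty.")
    # Hypothetical prompt; change the instruction for other tasks.
    prompt = f"Summarize the following text:\n\n{text}\n\nSummary:"
    return {"model": model, "prompt": prompt,
            "max_tokens": max_tokens, "temperature": temperature}


def summarize_with_gpt3(text: str, complete: Callable[..., str],
                        **params: Any) -> str:
    """Send the summary request through `complete` and return the cleaned text.

    `complete` receives the request as keyword arguments and must return
    the generated text; injecting it keeps the sketch testable offline.
    """
    request = build_summary_request(text, **params)
    return complete(**request).strip()
```

With the pre-1.0 `openai` package, `complete` could be `lambda **kw: openai.Completion.create(**kw)["choices"][0]["text"]`; newer versions of the package use a client object instead, so only that one lambda would need to change.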

Next, we integrate the new gpt3 function into the route: when we get the transcript from Whisper, we pass it to the gpt3 function and return the result.
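The flow inside the route can be sketched as a plain function that chains the two steps. This is a sketch under assumptions: the function name is hypothetical, the Whisper and GPT-3 calls are passed in as callables so the logic runs without either service, and the response keys `transcript` and `summary` are assumed.

```python
import json
from typing import Callable, Dict


def whisper_then_gpt3(audio_path: str,
                      transcribe: Callable[[str], str],
                      summarize: Callable[[str], str]) -> Dict[str, str]:
    """Transcribe an audio file, then summarize the transcript.

    `transcribe` and `summarize` are injected so the flow can be tested
    without Whisper or the OpenAI API.
    """
    transcript = transcribe(audio_path).strip()
    # Skip the API call when Whisper returned nothing useful.
    summary = summarize(transcript) if transcript else ""
    # Assumed response keys; match whatever your route actually returns.
    return {"transcript": transcript, "summary": summary}


if __name__ == "__main__":
    # Offline demo with stand-in functions.
    result = whisper_then_gpt3("talk.mp3", lambda p: " hello from " + p + " ",
                               lambda t: t.upper())
    print(json.dumps(result))
```

In a Flask route, `transcribe` could wrap the openai-whisper model (`lambda p: model.transcribe(p)["text"]`) and the returned dict could be passed to Flask's `jsonify`.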

1. Open a terminal and navigate to the folder where you created the files.

2. Run the following command to build the Docker image:

3. When the build has finished, run the following command to start the container:
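For reference, the two steps can also be scripted from Python with `subprocess`. This is a minimal sketch: the image tag `whisper-gpt3-api` is hypothetical, and it assumes the app listens on port 5000 inside the container, matching the URL used for testing.

```python
import subprocess
from typing import List

# Hypothetical image name; use whatever tag you prefer.
IMAGE_TAG = "whisper-gpt3-api"


def build_command(tag: str = IMAGE_TAG, context: str = ".") -> List[str]:
    """`docker build -t TAG CONTEXT` builds an image from the Dockerfile in CONTEXT."""
    return ["docker", "build", "-t", tag, context]


def run_command(tag: str = IMAGE_TAG, port: int = 5000) -> List[str]:
    """`docker run -p HOST:CONTAINER` publishes the app's port on localhost.

    Assumes the app listens on the same port inside the container;
    --rm deletes the container once it stops.
    """
    return ["docker", "run", "--rm", "-p", f"{port}:{port}", tag]


def build_and_run(tag: str = IMAGE_TAG, port: int = 5000) -> None:
    """Build the image, then run it in the foreground."""
    subprocess.run(build_command(tag), check=True)
    subprocess.run(run_command(tag, port), check=True)
```

Calling `build_and_run()` from the project folder performs both steps; `check=True` stops at the first command that fails.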

1. You can test the API by sending a POST request to http://localhost:5000/whisper with an audio file attached. The request body should be form-data.

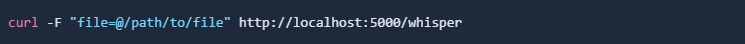

2. You can use the following curl command to test the API:

3. As a result, you should get a JSON object containing the transcript and its summary.
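The same request can be sent from Python using only the standard library. This is a hedged sketch: it assumes the route reads the upload from a form field named `file`, and the helper names are hypothetical.

```python
import json
import os
import urllib.request
import uuid
from typing import Any, Dict, Tuple

API_URL = "http://localhost:5000/whisper"


def encode_multipart(field: str, filename: str, data: bytes,
                     boundary: str) -> Tuple[bytes, str]:
    """Encode a single file upload as a multipart/form-data body.

    Returns the body bytes and the matching Content-Type header value.
    """
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n"
        "\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def post_audio(path: str, url: str = API_URL, field: str = "file") -> Dict[str, Any]:
    """POST an audio file as form-data and decode the JSON reply."""
    with open(path, "rb") as f:
        data = f.read()
    # A random boundary is very unlikely to appear inside the audio bytes.
    body, content_type = encode_multipart(
        field, os.path.basename(path), data, uuid.uuid4().hex)
    request = urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": content_type})
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `post_audio("sample.mp3")` should return the JSON object; with the same assumed field name, the curl equivalent would be along the lines of `curl -F "file=@sample.mp3" http://localhost:5000/whisper`.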

This API can be deployed anywhere Docker runs. Keep in mind that this setup currently uses the CPU to process the audio files. To use a GPU, you need to change the Dockerfile and give the container access to the GPU. I won't go deeper into this, as this is an introduction; see the Docker GPU documentation for details.
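As a pointer only, giving a container access to the GPU is done with the `--gpus` option of `docker run`. The sketch below assembles such a command; the function name and tag argument are hypothetical, and it assumes an NVIDIA GPU with the NVIDIA Container Toolkit installed on the host plus an image built with a CUDA-enabled PyTorch.

```python
from typing import List


def gpu_run_command(tag: str, port: int = 5000, gpus: str = "all") -> List[str]:
    """Build a `docker run` command that exposes host GPUs to the container.

    --gpus requires Docker 19.03+ and the NVIDIA Container Toolkit on the
    host; the image itself must also ship a CUDA-enabled PyTorch build.
    """
    return ["docker", "run", "--rm", "--gpus", gpus, "-p", f"{port}:{port}", tag]
```

The resulting list can be passed to `subprocess.run(..., check=True)`; `gpus` also accepts a count such as `"1"` instead of `"all"`.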